The Ethical Actuary—a Tautology: Never let it Become an Oxymoron!

By Dave Snell

Predictive Analytics and Futurism Section Newsletter, August 2021

I am proud to be an actuary! Ours is one of the most highly respected professions in the world. In the United States, we are regarded (with very rare exceptions, such as the Equity Funding scandal[1]) as paragons of virtue in financial calculations. Our motto has long been Ruskin’s famous quote: “The work of science is to substitute facts for appearances, and demonstrations for impressions.” In China, the term actuary, 精算师 (Jīngsuàn shī), literally means master of accurate calculations. A tautology is a self-evident statement, and the term ethical actuary clearly fits that definition.

Yet, as we embrace more and more of the roles and techniques of data science, artificial intelligence, and machine learning, we must be vigilant to ensure that we do not unknowingly jeopardize our reputation. Data science tends to rely more heavily on statistical techniques than actuarial science has in the past, and statistics suffers from the disparaging saying “lies, damned lies, and statistics.” Historically, statistics has also had some embarrassing leaders, such as Francis Galton, the person credited with coining the term regression, which underpins regression analysis and its many extensions and variants. Galton, a half-cousin of Charles Darwin, misused statistics to promote eugenics, and Hitler found the American eugenics movement an inspiration for his horrific ethnic cleansing atrocities.

Throughout the history of statistics, various people have perverted the presentation of facts to promote questionable or inappropriate viewpoints—sometimes on purpose, and other times inadvertently. I wish to discuss some of the latter cases in this article.

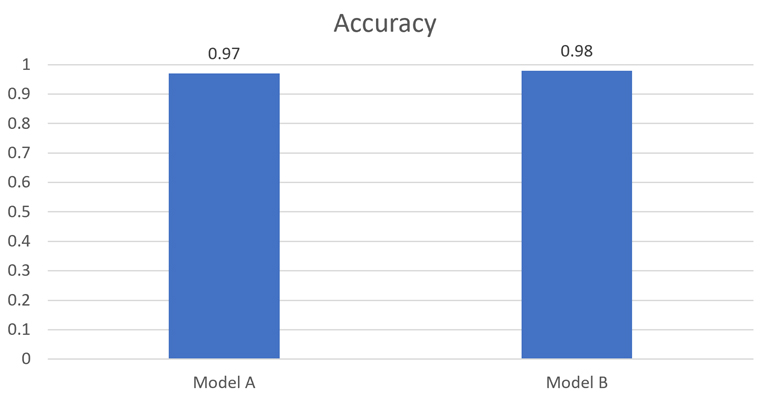

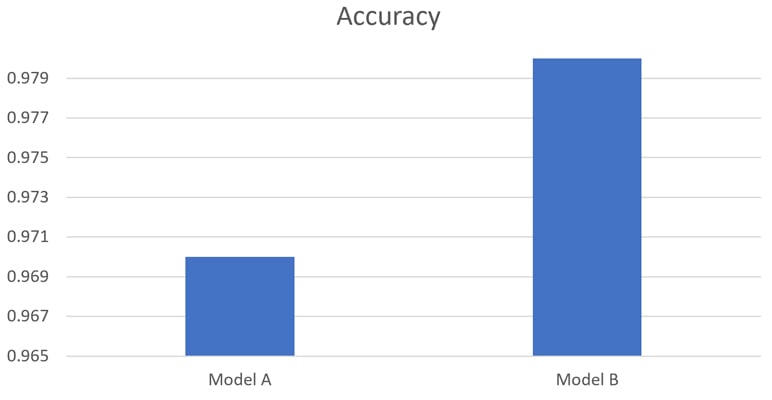

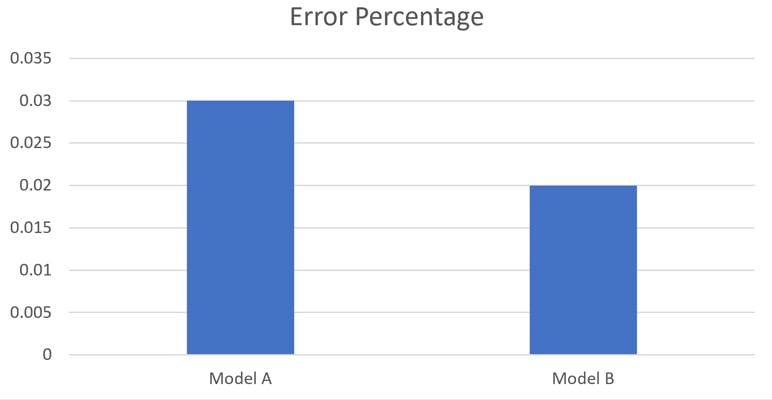

Let’s start with a very simple example. Assume that model “A” has 97 percent predictive accuracy and model “B’s” accuracy is 98 percent. We might plot the two as in figure 1, or as in figure 2. Figure 2 appears to make better use of space, but it distorts the visual presentation of relative accuracy. It is visually misleading. Yet figure 3 shows the same results from a different perspective and might be appropriate. Your perspective can frame a result as ethical or not ethical.

Figure 1

Comparing the Relative Accuracy of two Models

Figure 2

Alternate Presentation of the Relative Accuracy of two Models

Figure 3

Comparing the Relative Inaccuracy of two Models
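The arithmetic behind these three views can be checked with a short Python sketch. It compares bar-height ratios on a zero-based axis, on a hypothetical axis starting at 96 percent (the article does not say where figure 2’s axis starts), and as error rates. The function name `bar_height_ratio` is illustrative only.

```python
def bar_height_ratio(a, b, axis_floor=0.0):
    """Ratio of bar b's drawn height to bar a's when the y-axis starts at axis_floor."""
    return (b - axis_floor) / (a - axis_floor)


acc_a, acc_b = 97.0, 98.0  # accuracies of models A and B, in percent

# Zero-based axis (as in figure 1): the bars look almost identical.
zero_based = bar_height_ratio(acc_a, acc_b)

# Hypothetical truncated axis starting at 96 percent: B's bar looks twice as tall.
truncated = bar_height_ratio(acc_a, acc_b, axis_floor=96.0)

# Inaccuracy view (as in figure 3): error rates of 3 and 2 percent.
err_a, err_b = 100.0 - acc_a, 100.0 - acc_b
error_ratio = err_a / err_b

print(f"zero-based axis: B/A bar height = {zero_based:.4f}")
print(f"axis from 96%:   B/A bar height = {truncated:.1f}")
print(f"error rates:     A/B = {error_ratio:.1f}")
```

On the zero-based axis the one-point gap changes bar height by about 1 percent; on the truncated axis it doubles the bar. The error-rate view, by contrast, reflects a genuine 50 percent relative difference in mistakes.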

Moving on to a slightly more complicated, but probably more common, example, let’s examine the situation where we build a model, or test, that detects rare cancer “C” with 99 percent sensitivity (often loosely called accuracy), meaning that if 100 people with “C” undergo this test, 99 of them will be recognized as having “C” and only 1 person with “C” will slip through the filter. Tests with high sensitivity such as this may suffer from low specificity. If the test is applied to 10,000 people chosen at random, it might flag about 1,000 of them as having “C,” when only 10 actually have the disease; roughly 99 percent of the positive results would then be false alarms. The test has been tuned to permit a lot of false alarms in order to minimize missed cases of “C.” We see this situation in prostate cancer testing for men, and in breast cancer testing for women (although men die from breast cancer as well). Given that excisional biopsies, and even more invasive alternatives, carry a risk of adverse health and mortality effects, is it ethical to scare a patient into these procedures based on a test with overly high sensitivity?
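The false-alarm arithmetic is a direct application of Bayes’ theorem. The Python sketch below assumes the example’s round numbers: 10 true cases among 10,000 people, 99 percent sensitivity, and about 990 false positives among the 9,990 people without “C,” which implies a specificity of roughly 90 percent. The function name is illustrative.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """P(has C | test positive), computed with Bayes' theorem."""
    true_pos = sensitivity * prevalence               # P(positive and has C)
    false_pos = (1 - specificity) * (1 - prevalence)  # P(positive and no C)
    return true_pos / (true_pos + false_pos)


population = 10_000
cases = 10                       # people who actually have C
prevalence = cases / population
sensitivity = 0.99               # 99 of 100 true cases detected
# Assumed from the example: about 990 false alarms among the 9,990 healthy people.
specificity = 1 - 990 / (population - cases)

ppv = positive_predictive_value(prevalence, sensitivity, specificity)
expected_positives = population * (
    sensitivity * prevalence + (1 - specificity) * (1 - prevalence)
)

print(f"specificity      = {specificity:.3f}")
print(f"positive results = {expected_positives:.0f}")
print(f"P(C | positive)  = {ppv:.3f}")
```

Even with 99 percent sensitivity, only about 1 positive result in 100 reflects an actual case of “C.”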

As actuaries, we have a solid understanding of Bayes’ theorem, so I will not belabor this issue. Let’s move on to machine learning, an area that actuaries are embracing with increasing zeal.

Machine learning is being used to do some amazing things. Convolutional neural networks (CNNs) can perform facial recognition to a very high degree of accuracy. They can also detect cancerous tumors on x-rays, assess damages for property claims via drone photos, identify smokers in pictures on social media websites, and read attending physician statements and electronic health records (EHRs) faster than a human underwriter can. Yet their logic is less transparent than that of earlier methods, and they sometimes base their classification decisions on extraneous factors. In an experiment at the University of Washington, a CNN was used to distinguish between Huskies (dogs) and wolves, and it had high accuracy. But once the researchers investigated the logic used, they discovered the CNN was focusing primarily on the background instead of the animal.[2] If the background was snow, it assumed a wolf. CNNs are a special type of artificial neural network (NN), but all the various NNs suffer from a lack of transparency relative to many of our former predictive techniques. This poses another ethical dilemma: should we ignore the NNs and give up the increased accuracy they can provide, or use them for answers and then use more conventional methods such as generalized linear models (GLMs) to rationalize our answers? Even GLMs are not immune from ethical issues. If we base a hiring system (or underwriting system) on historical data that has inherent gender or racial bias, all we are accomplishing is the automation of an existing bias!
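The husky-versus-wolf failure is an example of what is often called shortcut learning: a model latches onto a feature that happens to correlate with the label in the training data. The toy Python sketch below is not the University of Washington setup. It reduces each image to two hypothetical binary features (`pointed_snout`, a noisy animal cue, and `snow`, the background) and fits a one-feature decision stump. In training, snow always accompanies a wolf; at test time the background is random.

```python
import random

random.seed(0)
FEATURES = ["pointed_snout", "snow"]  # hypothetical image features


def make_images(n, snow_means_wolf):
    """Return (features, label) pairs; label 1 = wolf, 0 = husky."""
    data = []
    for _ in range(n):
        wolf = random.random() < 0.5
        # The animal cue agrees with the true label only 70% of the time.
        snout = wolf if random.random() < 0.7 else not wolf
        # The background tracks the label perfectly in training, randomly at test.
        snow = wolf if snow_means_wolf else random.random() < 0.5
        data.append(((int(snout), int(snow)), int(wolf)))
    return data


def accuracy(feature, data):
    """Accuracy of predicting 'wolf' exactly when the chosen feature is 1."""
    return sum(x[feature] == y for x, y in data) / len(data)


def fit_stump(data):
    """Choose the single feature with the best training accuracy."""
    return max(range(len(FEATURES)), key=lambda i: accuracy(i, data))


train = make_images(1_000, snow_means_wolf=True)
test = make_images(1_000, snow_means_wolf=False)
chosen = fit_stump(train)

print("model relies on:", FEATURES[chosen])
print(f"train accuracy: {accuracy(chosen, train):.2f}")
print(f"test accuracy:  {accuracy(chosen, test):.2f}")
print(f"snout-only test accuracy: {accuracy(0, test):.2f}")
```

The stump scores perfectly in training and near chance at test, while the animal cue it ignored would have generalized. Only inspecting which feature the model relies on, as the researchers did, exposes the problem.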

A currently debated ethical example is the autonomous car. On the plus side, several test results and statistics have suggested that replacing the human driver with a model that has faster reaction time can save thousands of lives per year. I suspect that insurance companies will help nudge acceptance of this safer mode of travel through a preferred rate structure. The faster reaction time, though, can introduce ethical issues. Whereas a human driver suddenly facing the choice between hitting some innocent bystanders and veering off the road (damaging the car and possibly injuring the driver) may have just enough time to slam on the brakes and hope for the best, the model can assess the situation at silicon speed and choose from a range of options. What should it do? Researchers at MIT surveyed millions of people from 233 countries and territories for public opinion on this (“The Moral Machine experiment,” Nature, October 24, 2018), and, not surprisingly, the responses overwhelmingly indicated a preference for the algorithm to protect the group of innocents. Yet in the same survey, these respondents indicated that they would not purchase a car that would consider the driver less important than the innocents. Supposedly, a marketer at Mercedes saw an opportunity here and declared that the company’s autonomous cars would incorporate an algorithm giving higher priority to the protection of the driver and passengers. The German government said no! You can read the guidelines in the article “Germany Has Created the World’s First Ethical Guidelines for Driverless Cars.”[3]

Here in the United States, several states, regulatory agencies, and legislators are raising similar concerns about insurance companies and the ways we may (or may not) use the tsunami of new data available through social media, wearables, embeddable devices, ubiquitous cameras, credit reports, etc. Following is a short summary of some key documents (courtesy of David Moore, FSA):

New York DFS Circular Letter #1

This letter from the NY DFS has shaped the way companies look at using data for insurance underwriting, by defining what constitutes an adverse action and by laying out principles that companies should use as guidance when using external data sources.

Proxy Discrimination in the Age of Artificial Intelligence and Big Data

This article is a detailed analysis of proxy discrimination and how it can occur within an AI framework.

The Data Accountability and Transparency Act of 2020

This proposed legislation, just introduced by Senator Sherrod Brown, shows an example of what future regulation of data and privacy could entail.

National Association of Insurance Commissioners (NAIC) Principles on Artificial Intelligence (AI)

AI Principles recommended by the NAIC’s Innovation and Technology Task Force.
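The proxy discrimination problem described above can be illustrated with a small simulation. In this hypothetical Python sketch, the model never sees group membership; it sees only a binary `region` that matches group 80 percent of the time, and it learns from historical approvals that were biased against group 1. All rates are invented for illustration.

```python
import random

random.seed(1)

# Hypothetical applicants: (group, region, historically_approved).
people = []
for _ in range(10_000):
    group = int(random.random() < 0.5)
    # Region is a proxy: it matches group membership 80% of the time.
    region = group if random.random() < 0.8 else 1 - group
    # Biased history: group 1 was approved 40% of the time, group 0 70%.
    approved = random.random() < (0.4 if group else 0.7)
    people.append((group, region, approved))


def approval_rate(rows):
    """Share of rows that were historically approved."""
    return sum(row[2] for row in rows) / len(rows)


# "Group-blind" model: its score is the historical approval rate for the region.
region_score = {r: approval_rate([p for p in people if p[1] == r]) for r in (0, 1)}

mean_score = {}
for g in (0, 1):
    members = [p for p in people if p[0] == g]
    mean_score[g] = sum(region_score[p[1]] for p in members) / len(members)
    print(f"group {g}: mean model score {mean_score[g]:.2f}")
```

Removing the protected attribute did not remove the disparity; the proxy carried much of the historical bias into the model’s scores, which is exactly the automation of an existing bias.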

My point here is that we actuaries have enjoyed public trust for a long time; but the world is changing rapidly and our new toolkits bring both increased power and increased responsibility. If we do not address the ethical issues ourselves, then regulators will step in and impose restrictions upon us.

Your model becomes your reputation. An ethical dilemma can be a slippery slope. The consequences can be existential for a company, as government regulation and class action litigation could put a well-meaning firm out of business and might result in criminal charges for key management associates. Being ethical sounds easy, since we all seem to have an innate sense of morality; but in practice, it can be a challenge.

Many large companies will need to hire chief ethicists, just as they currently hire heads of Human Resources, Public Relations, and Legal, to help them avoid inadvertently causing unfair discrimination or even overt harm to humanity. This can be another opportunity for actuaries, based upon our deep knowledge of finance and our reputation for high integrity.

An oxymoron is a phrase that is internally inconsistent, like jumbo shrimp. I have no worries that “ethical actuary” will ever be viewed as an oxymoron; but let’s be aware of the many ways it could cease to be a tautology.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the newsletter editors, or the respective authors’ employers.

Dave Snell, FALU, MAAA, ASA, FLMI, CLU, ChFC, ACS, ARA, MCP, teaches Artificial Intelligence Machine Learning at Maryville University in St. Louis, MO. He was a member of the Ethics Committee at Cardinal Glennon Memorial Children’s Hospital for several years; and he passionately incorporates ethics into his AI classes. He can be reached at dsnell@maryville.edu.