By Mary Pat Campbell

Do you have Excel open? Put this in A1:

Do you have Excel open? Put this in A1:

=1*(.5-.4-.1)

What did you get? If you got zero, try asking Excel to show you more digits past the decimal point. Generally, though, Excel will revert to the following floating point notation as default:

-2.78E-17

To be sure, that’s small, but it’s not zero.

Separately, if you check out my spreadsheet from the January 2014 issue of CompAct, accompanying the article titled “Variations on Approximation—An Exploration in Calculation,” you will see that on some of the tabs, the cumulative sum of probabilities for the approximated binomial distribution are less than 1 (by about 10^-14). This may cause us trouble for certain applications of the distribution. The numbers calculated should add up to one, at least for the tabs not using approximations, if we were using exact arithmetic and pencil and paper. But it won’t in Excel.

Though my examples above deal with Excel, this is not unique to Excel. There are aspects to how Excel handles floating point in particular, but I want to start with the general problems and not go into excruciating detail. Merely annoying detail.

First, what is a floating point representation of a real number? It looks like this:

(+/-) Significand* baseexponent

Standard forms usually force that “Significand” part to be between 0.0 and 9.999999 (to the level of precision of the machine). The base will be specified (usually two for computers). Each system will have limits on how many significant digits there are (called the precision) and there will be limits to the exponent (positive and negative). There may be some asymmetry between positive and negative numbers so that the lowest negative number may not be equal in absolute value to the largest positive number in the system (similarly for the range of exponents).

For exaggerating the effect of arithmetic operations in floating point, I will be using some fairly low limits. I will allow up to four significant digits (will hold -9.999 to 9.999), I will allow exponents from negative three to four, and I will use base 10 for ease in hand-checking the operations.

Let’s check out some of the problems arising from floating point arithmetic:

- Overflow

- Underflow

- Loss of precision in converting into floating point

- Adding numbers of very different magnitudes

- Subtracting numbers of similar magnitudes

- Multiplying and dividing

Overflow

Overflow occurs when the number you are trying to express in floating point is too large in magnitude.

For our simple example, the largest allowable number is 9.999*10^4, or 99,990. Because numbers are generally rounded to the nearest number having the allowable significant digits, that means our number would have to round to 10.0* 10^4 for a number to overflow in this system.

Rounding and handling overflow differs by system. In general, overflow will be returned as infinity or NaN (i.e., not a number). If you overflow in the negative direction (having a number below -9.999 * 10^4, that is), that may return a similar error or there may be signed result, like –infinity. When Excel overflows, it generally gives a #NUM error.

In general, when overflow occurs, some kind of error is thrown. This is unlike the next type of problem.

Underflow

Underflow occurs when the number you are trying to express is too small in magnitude.

In our simple example, the smallest expressible positive number is 0.001 * 10^-3, or 10^-6. The number 10^-7 would underflow in this system.

(I want to note that underflow has a really persnickety definition, and I’m simplifying the issue for this reading. See the resources at the end of the article if you want full detail.)

Underflow as I’m describing it usually resolves as zero, and you are given no warning that this happened. Underflow occurred for many of my binomial probability examples in “Variations on Approximation” when the probability became smaller than 10^-308 (approximately), though sometimes it returned zero for larger numbers when calculating functions. The 10^-308 limit in Excel comes from double precision floating point calculation, and many of the native functions are in constrained areas and will underflow at 10^-16.

Loss Of Precision In Converting Into Floating Point

Let us convert the number 1/3 into our floating point system. The decimal is repeating: 0.333333...., and given our limits, we represent 1/3 as 3.333 * 10^-1.

How far off is that from its actual value?

The difference will be 0.0000333333..... or 1/3 * 10^-4.

We get this loss of precision all the time in our computing, because our numbers are being converted from decimal into binary floating point. Many things that look fine in decimal, such as 0.1 or 0.4, are repeating decimals in binary. In binary, 0.5 has a lovely representation: 0.1. So when we convert 0.1 and 0.4 into binary, losing precision thereby, and then try to subtract these from 0.5, we don’t get 0.

A good way to think about it is in terms of significant figures, and that the system imposes a ceiling on the number of sigfigs you’re allowed to have. That gives you an idea of how precision is lost in floating point operations.

Adding Numbers Of Very Different Magnitudes

So let’s do some actual arithmetic, and assume all the numbers are exactly represented before doing the operations (not necessarily the case in conversion from decimal to binary, as above).

What happens when we add 7.777*10^2 and 9.555*10^-1? That is, we are trying to add 777.7 to .9555.

In exact arithmetic, the answer is 778.6555. But that is way too many significant figures for our floating point system. We must round that to 778.7 for it to be in alignment with our system. The difference between the rounded answer and our exact answer is 0.0445. In relative terms, it’s a miniscule error, so maybe that doesn’t bother us.

But what should bother us is when we are doing something like adding up a bunch of numbers that are ultimately supposed to sum to one, but each is very small. This is what happened to us in doing the recursion relation for the binomial probabilities. At some point, we were trying to add a bunch of very low magnitude numbers to something that was close to one. Let us consider trying to add 0.010 * 10^-3 to 9.999*10^-1. That works out as: 0.9999 + 0.00001= 0.99991 = 0.9999 in our system. Now suppose we were trying to add this column of numbers:

| .9999 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

| .00001 |

That should equal 1, but if we did it step-by-step, from top to bottom in pairs, each additional 0.010 * 10^-3 would be like adding zero to 0.9999.

So how to handle this?

In general, you handle this kind of situation by adding up the smallest numbers with each other. In general, you want to add numbers of similar magnitude together.

In a simple approach, you would add that column of numbers from the bottom to the top, and then they will add up to 1. Note that floating point addition is not associative. Isn’t that interesting?

A different approach would be adding each of these smallest numbers in pairs, and then adding those pairs to each other.

Tip 1: Whenever possible, add numbers of similar small magnitude together before trying to add to larger magnitude numbers.

Subtracting Numbers Of Similar Magnitudes

The problem here is a loss of significant digits. If you subtract 9.999 and 9.998, you get 0.001 or 1.000 * 10^-3. You start with four significant digits for the initial numbers and end with only one significant digit.

This issue is highlighted when those original numbers were converted from outside the floating point system (as happens with conversion from decimal to binary). So suppose that the original numbers were actually 9.999 and 9.997571. The exact difference would be 0.001429, or 1.429 * 10^-3 which is expressible in our floating point system. This is a bit of a difference from 1.000 *10^-3.

So what do we do?

Before we convert the numbers into our system, we subtract 9.997 from both. That gives us 0.002 = 2.000 * 10^-3 and 0.000571 = 0.571 * 10^-3.

Tip 2: Before subtracting floating point numbers, you may need to “massage” the original numbers or change your algorithm in order to not lose significant digits in subtraction.

Check out the Wikipedia link in the resources to see examples of loss of significance, most notably in the quadratic formula when b is large and 4ac is very small.

Multiplying and Dividing

The problem with multiplication and division is that of overflow and underflow.

I first ran into this when I wrote some Fortran code for probability distributions for a statistics professor. He knew this would occur, and recommended to me that when trying to calculate something like the binomial distribution for very large values of n, to try to multiply and divide numbers as close to one as possible, to keep from underflow and overflow, and the loss of precision that results when you get close to these limits.

Using our system, suppose you wanted to do:

(99990 * 19990 * 22220) / ( 11110 * 77770)

If we did it in a strictly left-to-right calculation, we’d overflow on our first operation. If we calculated it like this:

1/77770 * 1/11110 * (99990 * 199990 * 22220)

We would underflow on the first multiplication of the two reciprocals.

What you would want to do is rearrange the steps (there is more than one way of doing this arrangement to prevent problems):

(99990/77770) * (22220/11110) * 19990 = 1.286 * 2.000 * 19990 = 5.141 * 10^4 (in our system)

Tip 3: To prevent overflow and underflow (as well as loss of precision) when multiplying and dividing numbers, try to rearrange the product so that one is multiplying by numbers near to one.

Another item one can try is to turn products into sums using logarithms. Let us pretend our log (base 10) function operates similarly to our floating point system.

Then we get: log(99990) + log(19990) + log(22220) – log(11110) – log(77770) = 4.711

Exponentiating back, we get: 10^4.711 = 5.140 * 10^4

So we get a little loss of precision in this approach, but making that transformation may be easier than trying to shuffle terms around in a calculation.I tried both of these approaches in the binomial approximation problem with varying success.

Tip 4: Sometimes transforming your calculation by applying a function (such as logarithms) can remove the danger of overflow and underflow, though it may provide a new source of loss of precision.

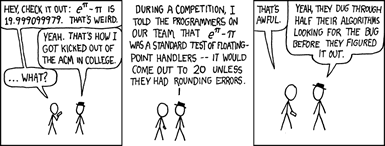

Source: xkcd website

For many (if not most) actuarial calculations, we have not come close to the kinds of situations that cause these problems. However, as we are called on to do more complicated numerical tasks, which is the direction many regulatory and risk management frameworks are going, we may run into these.

There are all sorts of cleverness people have applied to try to avoid floating point calculation problems. Check out the resources for some more detailed information on the kinds of problems that arise with floating point operations.

Resources and References

Prior related articles by the author

- “Variations on Approximation—An Exploration in Calculation,” CompAct, January 2014

- “Is This Significant—On Communicating Numbers and Fake Precision,” CompAct, February 2013

External resources

- “Loss of Significance,” Wikipedia

- “Floating point,” Wikipedia

- IEEE Standard for Floating-Point Arithmetic, IEEE Std 754-2008

- Lecture Notes on the Status of IEEE 754, Prof. W. Kahan, October 1997

- “What Every Programmer Should Know About Floating Point Arithmetic”

- “Five Tips for Floating Point Programming,” John D. Cook, October 2008

Mary Pat Campbell, FSA, MAAA, is life analyst for Conning Research & Consulting, Inc. She can be reached at marypat.campbell@gmail.com.