Actuaries, Information Theory and Exploiting a World of Surprises

It’s been said that the greatest technological breakthrough in communication, and perhaps in all of history, was the invention of the telegraph. No longer did messages need to be carried from point to point by hand. So long as the infrastructure was in place, messages could travel virtually instantaneously and at little cost. The potential speed of information transfer went from a few miles per hour to nearly the speed of light.

But what is communication? The Latin derivation suggests “to share” or “be in relationship with.” Merriam-Webster defines it as “a process by which information is exchanged between individuals through a common system of symbols, signs, or behavior.” More specifically, how does the way we communicate with our data and applications influence the way actuaries work? Put simply, actuaries are one part curator of information and one part purveyor of insight.

Modern Digital Information—Untangling Virtual Wiring

I like to say that between me and my smartphone, there is exactly one servant and one master, though at times it may not be clear who’s who. The Internet of Things, Big Data, social media, news feeds, YouTube, stock tickers … my brain is swirling in a constant feed of information: parsing it, reorganizing it, collating it in a way that produces value, solves real-world problems and answers real questions. All before even going into work. How do I manage it all? Why has it all felt so much more intense over the last 18 months? How do I cope with all the … noise?

“Information is the resolution of uncertainty.”—Claude Shannon

While a cursory search doesn’t verify that these were the published words of Claude Shannon, the spirit of the phrase is consistent with his work. One of the great innovators of early computing, he is rightly credited as the father of information theory, certainly in the sense that he named the field’s central ideas and codified them in mathematical terms. You see, in one sense, Claude Shannon faced a dilemma similar to the one we have today. He had a noise problem.

Analog Information—Untangling Real Wires

Shannon was an innovator, mathematician and engineer; in short, a superstar at Bell Labs in the ’40s. It was the very same Bell Labs that demonstrated the first transistor in late 1947, dramatically improving the amplification of telecommunications signals but boosting the noise along with them. Background noise, a problem first introduced with the telegraph, was one Shannon was already hard at work on. In 1948 he published his landmark paper, “A Mathematical Theory of Communication,” in which he writes:

“The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is one selected from a set of possible messages. The system must be designed to operate for each possible selection, not just the one which will actually be chosen since this is unknown at the time of design.”[1]

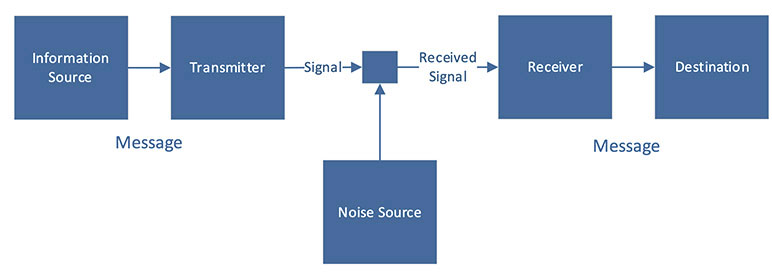

Shannon wasn’t solving a problem unique to the limits of analog technology. Where or how the chosen message is distorted has nothing to do with its content or with the now-dated architecture that carried it. Herein lies the timelessness of his work. (see Figure 1)

Figure 1

Claude Shannon’s Original Schematic Diagram of a General Communication System

“The beauty of this stripped-down model is that it applies universally. It is a story that messages cannot help but play out—human messages, messages in circuits, messages in the neurons, messages in the blood. … Those six boxes are flexible enough to apply even to the messages the world had not yet conceived of—messages for which Shannon was, here, preparing the way.”[2]

Core Components of Modern Information Theory

In the 1940s, new hardware technology like transistors essentially increased the power available to overcome physical deficiencies. But just like a megaphone in a crowded room, there are physical limitations to physical technology. It’s far more effective to tone down the amplitude of the information you don’t want to gather. But how do we distinguish between the two? This is the crux of the information problem and the birth of information theory. Its framework is useful to our understanding of information and knowledge. Here are the core concepts.

Information Theory: The study of quantification, storage, and communication of digital information.

The Channel: The end-to-end information architecture itself.

Channel Content (Set A): The chosen, intended message specified by the sender. Specifically, this is existing business knowledge.

Entropy (Set B): Mathematically, the measure of the amount of uncertainty in a random process. In short, perceived noise. Shannon describes it as “unexpected or surprising bits.” The unexpected part of the message. Undiscovered business knowledge.
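To make the entropy definition concrete, here is a minimal Python sketch of the standard formulas: the surprisal of a single outcome is the negative base-2 logarithm of its probability, and entropy is the average surprisal across a distribution. The coin distributions and the function names are illustrative assumptions, not taken from Shannon’s paper.

```python
import math


def surprisal(p: float) -> float:
    """Bits of surprise for an outcome with probability p (0 < p <= 1)."""
    return -math.log2(p)


def entropy(probs: list[float]) -> float:
    """Shannon entropy in bits: the average surprisal over a distribution."""
    return sum(p * surprisal(p) for p in probs if p > 0)


# Illustrative distributions (assumptions for this sketch).
fair_coin = [0.5, 0.5]      # every toss is maximally uncertain
loaded_coin = [0.99, 0.01]  # almost always lands the same way

print(entropy(fair_coin))    # one full bit per toss
print(entropy(loaded_coin))  # far less than one bit per toss
print(surprisal(0.01))       # the rare outcome carries many bits
```

The loaded coin has low entropy overall, yet its rare outcome is the single most informative event. That is the sense in which the unexpected part of a message carries the new knowledge.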

Now, the success of all information order hinges on one simple but key element: how to distinguish the intended message in Set A from the “noise” in Set B. But before we develop that, we should look more closely at what Set B actually is.

Curating Information Entropy and Surprisal

It was the mathematician John von Neumann, of the Institute for Advanced Study in Princeton, who reportedly suggested the term “entropy” to Shannon, in part because “no one knew what it meant.” Not to be confused with its thermodynamic cousin (to which it is mathematically related), information entropy can simply be thought of as surprise.[3] Just as with surprise, it’s the receiver who determines whether entropy is perceived as a good thing. Surprise at a 3-year-old’s birthday party may elicit a certain positive reaction. Surprise in an executive boardroom the day after an earnings release may be something else entirely. Either way, in the context of the actuarial professional, entropy represents new information and potential insight. What often appears as noise may just be your company’s most valuable data asset!

In the actuarial space, channel content is defined by our expectation set. Most of us have bits and pieces of these expectations swimming around in our heads, waiting for the opportune time to exclaim “that number doesn’t look quite right.” The reality is, if the actuarial department shared a network mind, there would be an expectation for virtually all input, output and aggregate data going in and out of the department. It’s just not documented in a usable way! This needs to be defined and standardized as part of an actuarial metadata program (more to come on that). If we can define an expectation tolerance (both logically and numerically) for each and every piece of significant data flowing through our departments, we’ll be able to separate channel content from entropy! Entropy should never be overlooked or simply relegated to a bin of errors. Chances are, it’s filled with everything from bespoke business knowledge to new insights. We must design a database asset to collect all the relevant outliers of our business, whether to review today for controls or to apply machine learning concepts down the road.
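As a rough illustration of an expectation tolerance, the Python sketch below splits records into expected channel content and retained surprises. The `Expectation` class, the field names and the numeric ranges are all hypothetical, invented for this example; a real metadata program would define its own.

```python
from dataclasses import dataclass


@dataclass
class Expectation:
    """Hypothetical metadata entry: the range a field is expected to fall in."""
    field: str
    low: float
    high: float


def split_channel(records, expectations):
    """Split records into expected content and retained surprises."""
    content, surprises = [], []
    for rec in records:
        # List every expectation this record falls outside of.
        breaches = [
            e.field for e in expectations
            if not (e.low <= rec[e.field] <= e.high)
        ]
        if breaches:
            # Keep the outlier, tagged with what was unexpected about it.
            surprises.append({**rec, "breached": breaches})
        else:
            content.append(rec)
    return content, surprises


# Illustrative tolerances and records (assumptions for this sketch).
expectations = [
    Expectation("issue_age", 0, 100),
    Expectation("annual_premium", 100.0, 50_000.0),
]
records = [
    {"policy": "A1", "issue_age": 42, "annual_premium": 1_200.0},
    {"policy": "B2", "issue_age": 117, "annual_premium": 900.0},
    {"policy": "C3", "issue_age": 35, "annual_premium": 75_000.0},
]

content, surprises = split_channel(records, expectations)
for s in surprises:
    print(s["policy"], s["breached"])
```

The design choice worth noting is that nothing is discarded: records outside tolerance are stored alongside the reason they were flagged, so they can be reviewed for controls now or mined later.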

Toward an Actuarial Thesis

In part 2, I will illustrate how to apply information theory to improve data quality as part of a model governance framework. The example is built on the following principles, originally developed in Claude Shannon’s work:

- Information is Stochastic

In the digital age, risk management relies heavily on information management. How long have we as actuaries viewed information as a deterministic state? We do so as a matter of physics, but what have we always been taught about information? Garbage in, garbage out!

A concise summary of Claude Shannon’s work on the theory might be: Information is probabilistic in nature. Even more precisely, information is stochastic in nature. In every message, stochastic rules dominate. For every “th” in a text string, there is a high likelihood that the next letter is an “e,” and so on. In the early days, chance entered the channel through the noise and limitations of analog hardware. In today’s digital world, that’s almost never the case. The noise enters through the uncertainty of the data collection and interpretation process itself. It’s no longer an issue of physics, but of epistemics!
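The “th” example can be checked empirically. The Python sketch below estimates the probability of each letter that follows a given prefix from simple counts. The sample sentence is an invented toy text, so the resulting numbers illustrate the method only and say nothing reliable about English as a whole.

```python
from collections import Counter


def next_letter_probs(text: str, prefix: str) -> dict[str, float]:
    """Estimate P(next letter | prefix) from raw counts in a text sample."""
    text = text.lower()
    n = len(prefix)
    counts = Counter(
        text[i + n]
        for i in range(len(text) - n)
        if text[i:i + n] == prefix and text[i + n].isalpha()
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {ch: c / total for ch, c in counts.most_common()}


# Toy sample text (an assumption for this sketch, not a real corpus).
sample = "the theory that the other thing is there at the third thaw"
probs = next_letter_probs(sample, "th")
print(probs)
```

In this toy sample, “e” is the most likely letter after “th.” A larger corpus would give steadier estimates, but the point stands either way: what has already been received shapes the odds of what comes next.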

- Redundancy Improves Message Quality

The optimizer in me suggests we streamline requests for information to save time, space or memory. But given modern capacity of technology, we should consider the case for the value of redundancy.

The solution to the problem of corrupted information transmission is very much observable in our own DNA structure. Redundancy is literally everywhere! Shannon devised coding methods to reduce the probability of losing information in the message. The message was self-validating.

This second principle should drive our model input data selection process. Information fields should “hang together” logically. Data should inspect itself dynamically, according to the DNA patterns (metadata) we provide it. Information selection and metadata can be designed to improve our own confidence in our actuarial work product!
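To show how redundancy lets a message validate itself, here is a minimal Python sketch of a repetition code with majority-vote decoding. This is the simplest textbook redundancy scheme, chosen for clarity; it is not the coding method Shannon himself devised, and the message and the positions of the flipped bits are assumptions for this example.

```python
def encode(bits: list[int], copies: int = 3) -> list[int]:
    """Repeat every bit `copies` times (a simple repetition code)."""
    return [b for b in bits for _ in range(copies)]


def decode(received: list[int], copies: int = 3) -> list[int]:
    """Recover each bit by majority vote over its group of copies."""
    groups = [received[i:i + copies] for i in range(0, len(received), copies)]
    return [1 if sum(g) * 2 > len(g) else 0 for g in groups]


# Illustrative message and noise (assumptions for this sketch).
message = [1, 0, 1, 1]
sent = encode(message)

corrupted = sent.copy()
corrupted[1] ^= 1  # noise flips one copy of the first bit
corrupted[9] ^= 1  # noise flips one copy of the fourth bit

recovered = decode(corrupted)
print(recovered == message)
```

The cost is three times the storage, and the protection only holds while at most one copy in each group is damaged. The same trade-off applies to model input data: carrying fields that can be cross-checked against one another costs space but lets the data flag its own inconsistencies.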

- Entropic Noise Has Business Value

New knowledge must be entropic. Put another way, information contained in the expected channel message is NOT new knowledge.[3] It only represents what is already known about our industry, business, and customers. New information is gained only through the availability, discovery and unlocking of entropic information! In the actuarial world, this is simply the information we don’t expect or understand. In a word, surprisal! We should focus our data sourcing, management and retention on this principle. Don’t discard the noise!

Human creativity resolves order from chaos, harmony from dissonance and business knowledge from entropy. Just like an actuarial problem, information measures our chances of learning something new!

To be continued in part 2, “Data Quality and Information Theory.”

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the newsletter editors, or the respective authors’ employers.