Model Risk Management Case Studies: Common Pitfalls and Key Lessons

By Katie Cantor, Josh Chee and Joy Chen

Risk Management, February 2021

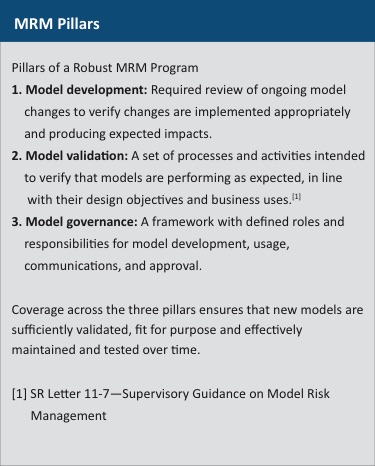

Actuarial models are foundational to the success and stability of life insurance companies. The 2008 financial crisis heightened the profile of financial models across the banking industry and beyond, and many life insurers have subsequently adopted an independent model risk management (MRM) function.

Meanwhile, the adoption of new and complex model-based reserving requirements, such as US GAAP Long Duration Targeted Improvements (LDTI) and US Statutory Principle-Based Reserving (PBR), has increased the need for robust validation of actuarial models, underlying assumptions and data.

Adopted in December 2019 and effective after Oct. 1, 2020, Actuarial Standard of Practice No. 56 (ASOP 56) is the recent modeling-specific ASOP and specifies the actuary’s responsibilities “with respect to designing, developing, selecting, modifying, or using all types of models.” ASOP 56 specifies considerations involving use cases, model structure, and data integrity, to name a few.

“A simplified representation of relationships among real world variables, entities, or events using statistical, financial, economic, mathematical, non-quantitative, or scientific concepts and equations. A model consists of three components: an information input component, which delivers data and assumptions to the model; a processing component, which transforms input into output; and a results component, which translates the output into useful business information.”—ASOP 56 model definition

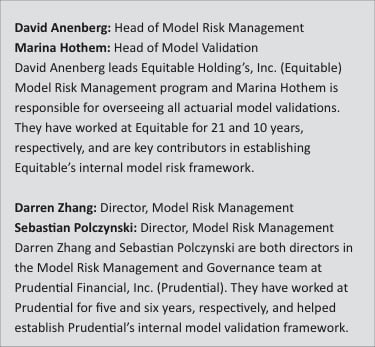

We connected with four model risk management industry experts (see sidebar 1) who have gone through the journey of establishing and executing a mature actuarial MRM function. This article provides their tips and tricks through three case studies that convey common issues MRM teams face.

Case Study 1: Lack of Model Change Management

Gloria from Company ABC works in the model development team and is responsible for overseeing the development of all the life business valuation models. Company ABC is on a continual model development cycle and implements changes every quarter. Gloria’s team has implemented 10 model enhancement changes into a sandbox model that remove simplifications and fix errors. It’s already mid-November and this model needs to be used for year-end reporting in December. Management has been requesting that these changes be implemented in the model for several quarters and Gloria does not want to let them down. Gloria has her team quantify the aggregate impact, which shows a $2 million reserve decrease. Company ABC’s policy allows model owners to decide whether a model change requires independent MRM review if it has a financial impact of less than $5 million. Due to time constraints, Gloria elects to bypass MRM review and promotes the model to production for year-end reporting. Lo and behold, in February, auditors find that two of the changes were not appropriately implemented, but they had large offsetting impacts that were not clear in the aggregate impact analysis.

Question: How does your MRM program mitigate the possibility of this scenario occurring? How do you balance management pressure to continually improve models against applying proper change management rigor under constrained timelines?

David: Our MRM program would mitigate that possibility in a few ways through model change management. The modeling teams must test and document all changes, including the model owner’s review, as ideally they would catch any issues up front with robust testing beyond just aggregate impact quantification. The testing would include a reasonability assessment on the quantified impact, in particular a comparison to any prior expectations if these had been known simplifications and errors for several quarters. A large number of changes like this would either be split up to allow for more detailed analysis of each change, or an impact attribution of each individual change would be provided, which could help reveal those offsetting issues. In addition, MRM is required to review all changes as an independent team, to ensure the testing and analysis were sufficient and to uncover any residual material issues. Finally, the changes are presented to the Model Governance Committee for additional peer review and challenge before approval, and then put into production.
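To illustrate why an impact attribution can reveal what an aggregate figure hides, here is a minimal Python sketch. It assumes the reserve impact of each change has already been measured separately and ignores any interaction between changes. The change names, the individual dollar amounts and the `flag_large_changes` helper are all hypothetical; only the $2 million aggregate decrease and the $5 million threshold come from the case study.

```python
from dataclasses import dataclass


@dataclass
class ModelChange:
    name: str
    reserve_impact: float  # $ millions; negative means a reserve decrease


def flag_large_changes(changes, threshold):
    """Return the aggregate impact and every change whose own impact
    is at least `threshold` in absolute value."""
    aggregate = sum(c.reserve_impact for c in changes)
    flagged = [c for c in changes if abs(c.reserve_impact) >= threshold]
    return aggregate, flagged


# Hypothetical attribution of the 10 changes (illustrative numbers only).
changes = [
    ModelChange("change_01", -40.0),   # large decrease
    ModelChange("change_02", 39.0),    # large, nearly offsetting increase
    ModelChange("change_03", -0.25),
    ModelChange("change_04", -0.25),
    ModelChange("change_05", -0.125),
    ModelChange("change_06", -0.125),
    ModelChange("change_07", -0.125),
    ModelChange("change_08", -0.125),
    ModelChange("change_09", 0.5),
    ModelChange("change_10", -0.5),
]

REVIEW_THRESHOLD = 5.0  # $ millions, per Company ABC's policy in the case study

aggregate, flagged = flag_large_changes(changes, REVIEW_THRESHOLD)
print(f"Aggregate impact: {aggregate:+.2f}m")
for change in flagged:
    print(f"Review individually: {change.name} ({change.reserve_impact:+.2f}m)")
```

With these illustrative numbers the aggregate sits below the threshold, yet two individual changes exceed it. Applying the threshold to each change, rather than only to the total, is one way a policy could keep offsetting impacts from bypassing review.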

Marina: Also, as part of periodic model validations, we provide recommendations and sometimes even tools for streamlining the modeling processes and enhancing controls around them to reduce potential for error in the future. In addition, the model validation team maintains independent recalculation spreadsheet tools for our highest risk models to ensure no errors are made as part of the model change process in between the periodic full-blown model validations.

David: There is no perfect formula and our company is still working on this balance too. We strive for it by highlighting the need for effective prioritization, streamlining the governance requirements where appropriate and commensurate with risk, and simplifying and modernizing models and processes over time to facilitate changes and avoid duplicate work.

Case Study 2: Insufficient Validation Plan

Matt works in Company ABC’s valuation team and is responsible for the production runs for their new PBR model. Company ABC’s MRM team had independently validated the PBR model in Q3 since it was given the highest MRM model risk rating. This will be the first time the PBR model is used for year-end statutory reporting. Matt completes the production runs well ahead of schedule and thinks to himself, “Well, that was a breeze. I love PBR!” However, two months later, the model development team stumbles upon a material model error. The model was correctly calculating reserves, but the product rates input into the model for product ZZZ and product YYY were swapped. These products do not account for a material portion of the business; however, the rate swap significantly impacted results. Since ZZZ and YYY were immaterial, both on policy count and reserves, they were not included in the scope of MRM’s input validation review.

Question: How is your MRM model validation framework structured to mitigate the risk of this situation occurring? How do your validation procedures differ based on your internal model risk rating scale?

Sebastian: Similar to most insurers, Prudential utilizes a three-lines-of-defense risk management framework. Under this framework, mitigating or preventing input errors like this starts with the first-line model owner, who is required to have adequate controls, current documentation, and testing surrounding their model inputs. This significantly increases the likelihood that inputs and assumptions are correctly implemented within a model.

The first line is required to perform ongoing performance monitoring on their model’s outputs. Such monitoring would include detailed analysis of the period-to-period model output changes attributed to key input, assumption, or methodology changes. Any unexplained differences would lead to further investigation, which would likely identify any errors, such as the one found with products YYY and ZZZ. The second line will perform its own independent implementation review by utilizing various tests such as output analytics which examine ratios or trends using model outputs. This type of analysis is typically designed to identify abnormal model output behavior that is inconsistent with the in force and assumption characteristics. In the example, the significant impact from the input error indicates that the product rates vary significantly between YYY and ZZZ. An analysis on an implied ratio backed out by key model outputs and compared against source product rates would likely expose the misalignment between products.
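The implied-ratio analysis described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not Prudential's actual test. It assumes each product's rate can be backed out as a rate-driven output amount divided by an exposure base. The `implied_rate_exceptions` helper, the third product XXX, the tolerance and every figure are hypothetical.

```python
def implied_rate_exceptions(source_rates, model_outputs, tolerance=0.0005):
    """Back out an implied rate per product from model outputs and
    return the products whose implied rate differs from the source rate.

    source_rates:  {product: rate from the source rate table}
    model_outputs: {product: (rate_driven_amount, exposure_base)}
    """
    exceptions = {}
    for product, (amount, base) in model_outputs.items():
        implied = amount / base
        expected = source_rates[product]
        if abs(implied - expected) > tolerance:
            exceptions[product] = (implied, expected)
    return exceptions


# Hypothetical source rates and model outputs (illustrative numbers only).
source_rates = {"XXX": 0.03, "YYY": 0.02, "ZZZ": 0.045}
model_outputs = {
    "XXX": (30.0, 1000.0),  # implies 3.0%, matches source
    "YYY": (45.0, 1000.0),  # implies 4.5%, which is the ZZZ rate
    "ZZZ": (20.0, 1000.0),  # implies 2.0%, which is the YYY rate
}

exceptions = implied_rate_exceptions(source_rates, model_outputs)
for product, (implied, expected) in sorted(exceptions.items()):
    print(f"{product}: implied {implied:.3%} vs source {expected:.3%}")
```

Because the check runs over every product regardless of size, it does not depend on a product being material enough to fall within the scope of the input review.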

Darren: The MRM function at Prudential (MRMG) utilizes a model risk assessment measure which is calculated based on the materiality, complexity, and assumption uncertainty of the model. Based on the calculated score, a risk rating of high, medium, or low is issued. On an ongoing basis, the first line is required to report any material changes, and the second line will periodically assess the risk associated with model changes made since the model’s last certification for medium- and high-risk models. Models with material changes will be scheduled for full re-review and recertification.
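A score-based rating of this kind might look like the Python sketch below. The article does not disclose the formula, so everything here is an assumption made for illustration: the 1-to-5 scale for each factor, the weights and the cutoffs. None of it represents Prudential's actual measure.

```python
def risk_rating(materiality, complexity, assumption_uncertainty,
                weights=(0.5, 0.25, 0.25),
                high_cutoff=4.0, medium_cutoff=2.5):
    """Combine three factor scores (each assumed to be on a 1-5 scale)
    into a weighted score and map it to a high/medium/low rating.

    The weights and cutoffs are hypothetical placeholders.
    """
    for factor in (materiality, complexity, assumption_uncertainty):
        if not 1 <= factor <= 5:
            raise ValueError("each factor score must be between 1 and 5")

    w_mat, w_cmp, w_unc = weights
    score = (w_mat * materiality
             + w_cmp * complexity
             + w_unc * assumption_uncertainty)

    if score >= high_cutoff:
        rating = "high"
    elif score >= medium_cutoff:
        rating = "medium"
    else:
        rating = "low"
    return score, rating


# Hypothetical example: a new, material, complex reserving model.
print(risk_rating(materiality=5, complexity=4, assumption_uncertainty=4))
```

Whatever the real calibration, the rating would then drive the depth and frequency of second-line review, in line with the risk-based approach described in the interview.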

Case Study 3: Lack of Stakeholder Buy-In

Richard just joined Company ABC and has taken over the model owner role for all the indexed annuity models. His prior role was in model development at Company No-MRM where, you guessed it, they did not have an MRM function. Company No-MRM had rigid first line of defense policies that required comprehensive testing and validation prior to promoting any models or changes through to production. When Richard joined Company ABC, he was immediately opposed to the MRM function as he viewed it as redundant and an inefficient use of his time. Furthermore, Richard has been communicating to Company ABC’s leadership team that the MRM program adds unnecessary overhead without significantly reducing risks, because they could simply add more rigor to their first line of defense testing.

Question: What are some of the keys to your MRM function successfully gaining the buy-in of all stakeholders (e.g., modelers through to senior management)? How do you find the right balance between rigorous and robust MRM policies without creating burdensome overhead for the model owners and production teams?

Marina: The model validations performed by our MRM team have produced a significant number of findings and valuable observations that have contributed to increasing buy-in from different stakeholders. We have a team of MRM resources dedicated specifically to model validation who are truly independent of model development. In addition to identifying errors and simplifications, many of our validation reports include quality assessments of model design and maintenance as well as conceptual analysis of model fit-for-purpose. The modeling teams use conclusions from our validation reports to improve the model accuracy and reduce potential for future error. The model validation reports also inform senior management of the weaknesses in the models, which facilitates better decision making on the allocation of the efforts and resources for model modernization, process and documentation improvement, and strengthening first line controls initiatives.

David: I agree that helping modelers succeed is a great way to achieve their buy-in. I’d add that through model change reviews we can also help them avoid some work down the road with management or auditors, by suggesting ways to improve their support or asking questions as an outsider to strengthen their explanations. We’re ultimately all on the same team, just different lines of defense—a soccer team playing with too many forwards may score a lot of goals but could let in even more!

Darren: The key for our MRM function to successfully gain buy-in from all stakeholders starts with strong leadership and a clear vision. Having a vision that is communicated by the CRO makes it much easier for others to buy in when it’s clear what the end goal is. At Prudential, our senior management values having a centralized and independent second-line model risk control function. One of the keys to our model risk group successfully gaining buy-in is our Model Governance Council, which has senior leadership representation from actuarial, finance, investments, and risk. The council provides a forum for senior management and subject matter experts to discuss issues related to model risk governance and controls. Most importantly, getting buy-in from the first line is critical to our success. This starts with building a successful relationship between the first and second line. We strive to be seen as a value-added partner and avoid having an adversarial relationship with the first line.

Sebastian: It is also important to address the model owner’s concern of “redundant and an inefficient use of his time.” We would highlight three points here that should be clearly communicated: 1. The model governance process should be built into the model development life cycle, rather than retroactively performed at the end of model development, which would undermine the effectiveness and perceived value of the control; 2. The MRM team should collaborate with other existing oversight functions, such as internal audit, to avoid duplicated efforts and inconsistent standards; 3. The second-line MRM review strategy should be risk-based and designed on top of existing first-line testing to avoid repeating the same work without adding any value.

Key Lessons Learned

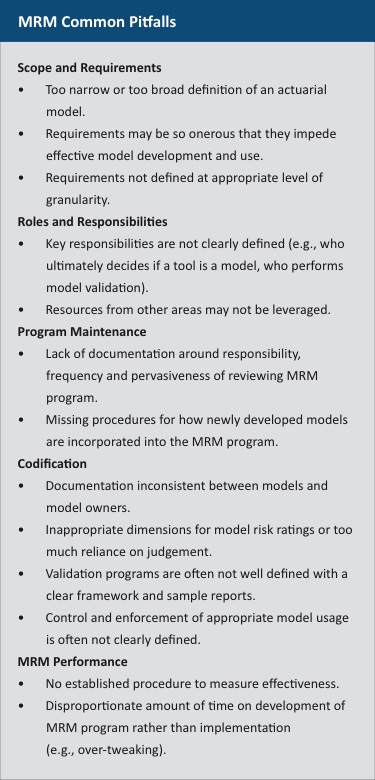

Across the case studies, three key lessons stand out for running an effective actuarial MRM program:

- MRM team is not a substitute: The presence of an MRM function is not a reason to decrease testing rigor at other lines of defense.

- Commensurate rigor with risk: Balance the level of rigor based on the inherent actuarial model risk to avoid burdensome overhead with marginal value.

- It takes a village to effectively manage model risk: We are all on one team. Cultivating a risk-based culture, with buy-in at all levels within an organization, will ultimately drive the success of any MRM program.

The views or opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of Oliver Wyman, or of the Society of Actuaries.

Katie Cantor, FSA, MAAA, is a partner at Oliver Wyman. She can be reached at katie.cantor@oliverwyman.com.

Josh Chee, FSA, MAAA, is a senior consultant at Oliver Wyman. He can be reached at josh.chee@oliverwyman.com.

Joy Chen, FSA, CERA, is a senior consultant at Oliver Wyman. She can be reached at joy.chen@oliverwyman.com.