GAAP Long-Duration Targeted Improvements: Whether Largely a Compliance or Modernization Exercise, the Considerations for the Modeling Actuary are Numerous

By Dave Czernicki, Ryan Laine, Jean-Philippe Larochelle and Cassie He

The Modeling Platform, April 2021

Accounting Standards Update (ASU) No. 2018-12, also referred to as “Targeted Improvements to the Accounting for Long-Duration Contracts” (LDTI), amends existing accounting requirements under generally accepted accounting principles (GAAP) for long-duration contracts. For U.S. reporting companies, LDTI is one of the most significant accounting framework changes in decades, impacting virtually every functional area within the company.

The actuarial modeling process is no exception; implementing changes of this magnitude requires careful assessment of the pending requirements, measured coordination with associated in-flight efforts, and collaboration across accounting, finance, actuarial, and information technology (IT) departments.

In particular, modeling actuaries must navigate a confluence of changes to the models, including increasingly complex calculations, new data sources, new reporting requirements, and integration of functionality being rolled out by actuarial software providers. Further, a company’s particular set of circumstances, such as being a public or private entity, or the degree of internal resource capacity, can influence the degree to which LDTI will be more of a compliance exercise than an opportunity to modernize.

This article explores some key challenges facing the modeling actuary related to LDTI implementation and shares associated compliance and modernization considerations.

Migrating Toward a Defined, yet Adaptive, Future State Vision

Establishing a clear future state vision of a company’s end-to-end process for financial reporting and translating this vision into a strategy and tactical execution blueprint is critical for successful LDTI implementation. This is true regardless of whether companies approach LDTI as a compliance exercise, aiming for “minimum viable product,” or as an opportunity to modernize. A key question to answer at the outset of an LDTI implementation is what the scope should be from a modernization perspective.

Over the past two years, a number of companies have formulated road maps for their LDTI journey, with their modernization scope defined, and are currently in the midst of execution. During this time, the industry has had to navigate a number of moving targets, such as an evolving interpretation of LDTI guidance, changing timelines for the go-live date, and evolving industry approaches toward implementation. Companies must therefore plan and implement adaptively, in an agile fashion, prioritizing or backlogging aspects of development based on new information revealed along the way. Companies should consider taking stock of their current future state vision and blueprints and determining whether refinements are required. For example, a number of companies with in-flight model conversions had to make conscious decisions to keep portions of their business on legacy actuarial software given tight implementation timelines. With an expanded implementation horizon, possibilities open up to convert more models to the future state platform and raise the ceiling on transformative benefits to the reporting process. At the same time, the industry continues to make substantial progress in interpreting and deliberating the implications of LDTI; therefore, a company’s requirements may have evolved, and its modeling actuaries may have a better understanding of the intricacies of LDTI modeling, based on learnings from the past two years, to incorporate into the implementation plan.

Taking an adaptive approach, such as agile, or even just periodically reviewing your strategy and tactical blueprint, can help your company realign priorities across the functional areas, and reduce the operational risk of some areas falling behind others. This effort also opens up an opportunity to assess the latest tools and technologies that can be valuable on a look-forward basis.

Lastly, modeling actuaries must take an active stance in contributing to the vision and design of a company’s post-LDTI world. Actuarial models are a key part of the end-to-end LDTI reporting process, and the quality of the model and associated infrastructure will have a direct impact on the efficiency and effectiveness of the end-to-end process.

End-to-end Considerations: Not Your Grandfather’s Financial Modeling Process

Over the past decade, actuarial models grew increasingly complex due to regulatory changes and greater demands for value-add outputs (e.g., forecasting). Vendor-based actuarial systems reacted with new capabilities, including process automation, data warehousing solutions, cloud-based ecosystems, and systems integration capability through application programming interfaces (APIs). As a result of this new technology, actuarial modelers can no longer “fly solo,” apart from other functional areas such as IT, given considerations around runtime, data, and controls.

LDTI will further stress the actuarial modeling process due to new data requirements (e.g., historical actuals), increased data volume, enhanced disclosures, and more sophisticated analysis. Regardless of whether a company treats LDTI as a compliance exercise or an opportunity to modernize, it must consider the full end-to-end elements of the post-LDTI world now, and design processes that meet both regulatory requirements and its own strategic business needs.

The following sections provide some key end-to-end modeling considerations the modeling actuary should be aware of. The degree to which companies choose to adopt or avoid these concepts depends on their considerations around compliance and modernization.

Consolidation and Centralization

LDTI prompts considerations around the consolidation of actuarial models and centralization of the actuarial modeling processes. Many aspects of LDTI make a centralized process more favorable than a decentralized process:

- “Single source of truth” database solutions: The actual historical transactional data will feed into both actuarial models and the financial ledger. Companies that have a “single source of truth” database solution will be able to more effectively implement efficient controls and governance compared with a decentralized data solution. Some companies are taking LDTI as an opportunity to evaluate newer data solutions, for both the relational and non-relational database management systems.

- Consolidate and standardize to reduce complexity: For most companies, LDTI is expected to be more complex than current GAAP, with more onerous reporting requirements. Companies should look for opportunities to standardize inputs into models and minimize back-end transformations on outputs from models. Companies should also consider consolidating actuarial models, where there are synergies in products or approach, to reduce future ongoing maintenance efforts.

- Future operating model: LDTI will stress existing operating models, with a heavier reliance on actual transaction data, increased assumption governance requirements, and increased calculation complexity. Companies should assess future operating models, including the staffing model and associated mix of required skill sets, to evaluate how to best support future modeling efforts. For example, if a company adopts an LDTI consolidated model, then a single modeling center of excellence can cut across product lines and business units, including a greater presence of IT and data-oriented resources.

Systems Integration and Automation

Insurers can gain significant operational efficiencies by incorporating well-designed automation in the end-to-end process. The following are some specific automation opportunities that modeling actuaries should consider:

- Data staging and preparation: Streamlining or removing manual processes required for the model inputs, such as in-force inventory and assumption data, can directly improve the ability to automate and control front-end processing. It is also important to capture any incremental historical or transactional data needed for back-end reporting, making sure these are processed and warehoused properly for eventual consumption.

- Disclosures: More detailed financial disclosures are required under LDTI, leading to an increased reliance on sets of model projection output and transaction data. Thought should be given as to how to stage components of the end-to-end process to best accommodate disclosures. Much of the heavy lifting can be handled using the actuarial software platform, via various batching or process automation capabilities. Ideally, the goal would be a “one-click” model runbook, and design analysis should be performed to determine which parts of the end-to-end process can accommodate these demands. In-house aggregation tools may be needed to aggregate disclosures for the financial statements.

- Integration-centric workflow: When designing future processes in a post-LDTI world, some companies are looking into the use of work orchestration applications to automate and control sets of processes, with goals to create low-touch or no-touch end-to-end processes. Newer generations of these applications often have capabilities to use APIs to integrate different systems (e.g., data warehouse to actuarial modeling system), which can enable automatic data flow among networks of tools. These workflow tools can provide a standardized and controlled model execution process that can be used for period-end reporting for LDTI and other use cases.
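The low-touch workflow idea above can be illustrated with a rough Python sketch that chains a few steps and halts as soon as a control fails. It is a minimal sketch under stated assumptions, not a depiction of any particular orchestration product: the step names (`stage_inforce`, `check_control_totals`, `run_model`), the `face_amount` field, and the 1% reserve factor are all hypothetical placeholders, and a real `run_model` step would call the actuarial platform through its own API rather than compute anything itself.

```python
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Tuple[str, Callable[[Context], Context]]


def stage_inforce(ctx: Context) -> Context:
    """Hypothetical staging step: keep only records with a positive face amount."""
    staged = [r for r in ctx["source_records"] if r["face_amount"] > 0]
    return {**ctx, "staged": staged}


def check_control_totals(ctx: Context) -> Context:
    """Control: staged face amount must reconcile to the source extract."""
    source_total = sum(r["face_amount"] for r in ctx["source_records"])
    staged_total = sum(r["face_amount"] for r in ctx["staged"])
    if abs(source_total - staged_total) > ctx.get("tolerance", 0.0):
        raise ValueError(f"control total break: {source_total} vs {staged_total}")
    return ctx


def run_model(ctx: Context) -> Context:
    """Placeholder for a call to the actuarial modeling system (assumed, not a real API)."""
    reserve = 0.01 * sum(r["face_amount"] for r in ctx["staged"])
    return {**ctx, "reserve": reserve}


def run_pipeline(steps: List[Step], ctx: Context) -> Tuple[Context, List[Tuple[str, str]]]:
    """Run steps in order; stop at the first failure and return an audit log."""
    log: List[Tuple[str, str]] = []
    for name, step in steps:
        try:
            ctx = step(ctx)
        except Exception as exc:  # record the failure and halt downstream steps
            log.append((name, f"failed: {exc}"))
            break
        log.append((name, "ok"))
    return ctx, log


PIPELINE: List[Step] = [
    ("stage_inforce", stage_inforce),
    ("check_control_totals", check_control_totals),
    ("run_model", run_model),
]

clean = {"source_records": [{"face_amount": 1000.0}, {"face_amount": 2500.0}]}
result, audit_log = run_pipeline(PIPELINE, clean)
```

The design choice worth noting is that the control sits between staging and the model run, so a reconciliation break stops the process before any model output is produced, and the log gives a simple audit trail of which step failed.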

Advanced Analytical Capabilities

The following processes can be leveraged to gain more efficiency and value from the LDTI efforts:

- Dashboard reporting: The desire for on-demand dashboard-style reporting is not new with LDTI, but the new reporting requirements are furthering demands for these capabilities. A foundational element of this from the modeler’s perspective is to make sure the actuarial modeling system is producing the proper metrics at the proper level of grain to accommodate all back-end reporting needs. This may require some analysis during the implementation to map new reports and associated line items to variables resident in your financial models to make sure the data is there. For example, reports such as sources of earnings will look different under an LDTI lens, and companies will need to consider the look and feel of these reports early on to ensure that the requirements are captured in actuarial models. On top of that, once the reporting data is at the right level of detail, some companies have looked to move away from bespoke spreadsheet or database tools to more powerful business intelligence (BI) toolsets when formulating analyses and associated reports. Actuarial modelers often need to work in close contact with valuation, IT, and other areas to develop these BI reports.

- Artificial intelligence and machine learning (AIML): The increased complexity associated with the calculation of GAAP balances puts a strain on processing time to some degree for valuation purposes, but even more so when companies aim to forecast GAAP financials. Traditional brute-force nested stochastic techniques are fraught with runtime/granularity tradeoffs that tend to hamper the quality and actionability of associated analyses. We have recently observed consideration of AIML techniques as a mathematical alternative to traditional Monte Carlo simulation techniques, offering a substantial speed-up without sacrificing accuracy. Applying these techniques to use cases that forecast LDTI results, such as financial planning or pricing, may help improve the fidelity and “speed to market” a modeling actuary can expect from these analyses on a go-forward basis.
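To make the proxy idea concrete, the sketch below fits a simple least-squares line to a handful of “expensive” valuations and then checks the fit at a point it was not trained on. This is only an assumption-laden illustration: a straight-line regression stands in for the more sophisticated machine learning models the article refers to, and `expensive_valuation` is a hypothetical toy (the present value of a level 10-year annuity) standing in for a costly inner-loop model run.

```python
from typing import Callable, Sequence


def expensive_valuation(rate: float) -> float:
    """Toy stand-in for a costly inner-loop run: PV of 100 paid annually for 10 years."""
    return sum(100.0 / (1.0 + rate) ** t for t in range(1, 11))


def fit_linear_proxy(xs: Sequence[float], ys: Sequence[float]) -> Callable[[float], float]:
    """Ordinary least squares fit of y = intercept + slope * x, returned as a function."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x


# Train on a small number of full valuations (hypothetical rate grid).
train_rates = [0.02, 0.03, 0.04, 0.05, 0.06]
proxy = fit_linear_proxy(train_rates, [expensive_valuation(r) for r in train_rates])

# Out-of-sample check: compare the proxy with a full valuation at an unseen rate.
holdout_rate = 0.045
full_value = expensive_valuation(holdout_rate)
relative_error = abs(proxy(holdout_rate) - full_value) / full_value
```

Once fitted, the proxy can be evaluated across many outer scenarios at negligible cost. The out-of-sample check matters as much as the fit itself, since any speed gain is only useful if the approximation error stays within an agreed tolerance.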

Testing and Validation: Gaining Comfort Without a Point of Comparison

Actuarial modeling functions are no strangers to testing their models. Mature organizations with established first and second lines of defense have time-tested protocols and procedures in place to manage model risk via change management, as well as performing periodic review and inspection of in-production models. LDTI, like principle-based reserving (PBR), brings a unique challenge to the actuarial modeler when testing and validating a model. The actuarial modeler does not have any readily available challenger models (e.g., legacy models) or validation tools to grab off the shelf to help test and validate these newly built LDTI models. In addition to testing and validating a new measurement model, the actuarial modeler has new data elements and assumptions being fed into the model that have not yet been tested and will require additional procedures and approaches.

In lieu of having legacy model results to validate LDTI against, the actuarial modeler can develop single-cell replication tools and mock disclosures as a form of independent validation of the new calculations performed by the model. Performing a waterfall-type attribution analysis from current GAAP to LDTI on the aggregate results is a viable method of validating and explaining the new LDTI results to management and auditors. These testing procedures typically require resources with a more specific skill set (e.g., LDTI expertise to build independent tools) and require a greater amount of effort to perform than testing against legacy results. Thus, the actuarial modeler should ensure leadership is aware of these testing challenges, so they are appropriately reflected in the LDTI implementation road map.
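As a rough illustration of what a single-cell replication tool might look like, the Python sketch below independently recomputes a net premium ratio and a liability for future policy benefits for one cell, then compares the result with a model value within a tolerance. It is deliberately simplified and rests on assumptions not taken from the article: annual cash flows, premiums paid at the start of each year and benefits at the end, one flat discount rate, and no expenses, historical actuals, cohort aggregation, or current-rate remeasurement. The 100% cap on the ratio and the zero floor on the liability reflect our reading of the standard. All function names and cash flows are hypothetical.

```python
from typing import Sequence


def present_value(cash_flows: Sequence[float], rate: float, timing: float) -> float:
    """PV of annual cash flows; timing=0.0 is start-of-year, 1.0 is end-of-year."""
    return sum(cf / (1.0 + rate) ** (t + timing) for t, cf in enumerate(cash_flows))


def net_premium_ratio(benefits: Sequence[float], gross_premiums: Sequence[float],
                      rate: float) -> float:
    """PV of benefits over PV of gross premiums, capped at 100%."""
    pv_benefits = present_value(benefits, rate, timing=1.0)
    pv_premiums = present_value(gross_premiums, rate, timing=0.0)
    return min(pv_benefits / pv_premiums, 1.0)


def liability(benefits: Sequence[float], gross_premiums: Sequence[float],
              rate: float, k: int) -> float:
    """Liability at the start of year k: PV future benefits less PV future net premiums."""
    npr = net_premium_ratio(benefits, gross_premiums, rate)
    pv_benefits = present_value(benefits[k:], rate, timing=1.0)
    pv_premiums = present_value(gross_premiums[k:], rate, timing=0.0)
    return max(pv_benefits - npr * pv_premiums, 0.0)  # floored at zero


def within_tolerance(model_value: float, replica_value: float,
                     rel_tol: float = 1e-6) -> bool:
    """Acceptance check: model and replica agree within a relative tolerance."""
    scale = max(abs(model_value), abs(replica_value), 1.0)
    return abs(model_value - replica_value) <= rel_tol * scale


# Hypothetical single cell: increasing benefits funded by level gross premiums.
benefits = [5.0, 10.0, 15.0]
premiums = [12.0, 12.0, 12.0]
replica = [liability(benefits, premiums, 0.03, k) for k in range(3)]
```

In practice the `replica` values would be compared, period by period, with the corresponding output from the production model using `within_tolerance`, with the tolerance itself agreed as part of the acceptance criteria.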

As part of the project planning phase, the actuarial modeler should develop a complete testing plan, including acceptance criteria to be used as tollgates for each phase of testing and, ultimately, to deem the end-to-end model fit for production go-live. No actuarial modeler wants to approach the go-live date unable to obtain user acceptance of the model because the testing required was underestimated. Generally speaking, there are four key benefits of having a comprehensive test strategy covering all phases of testing:

- mitigation of financial reporting risk,

- increased predictability and quality,

- reduction of overall costs of testing, and

- demonstration of audit compliance.

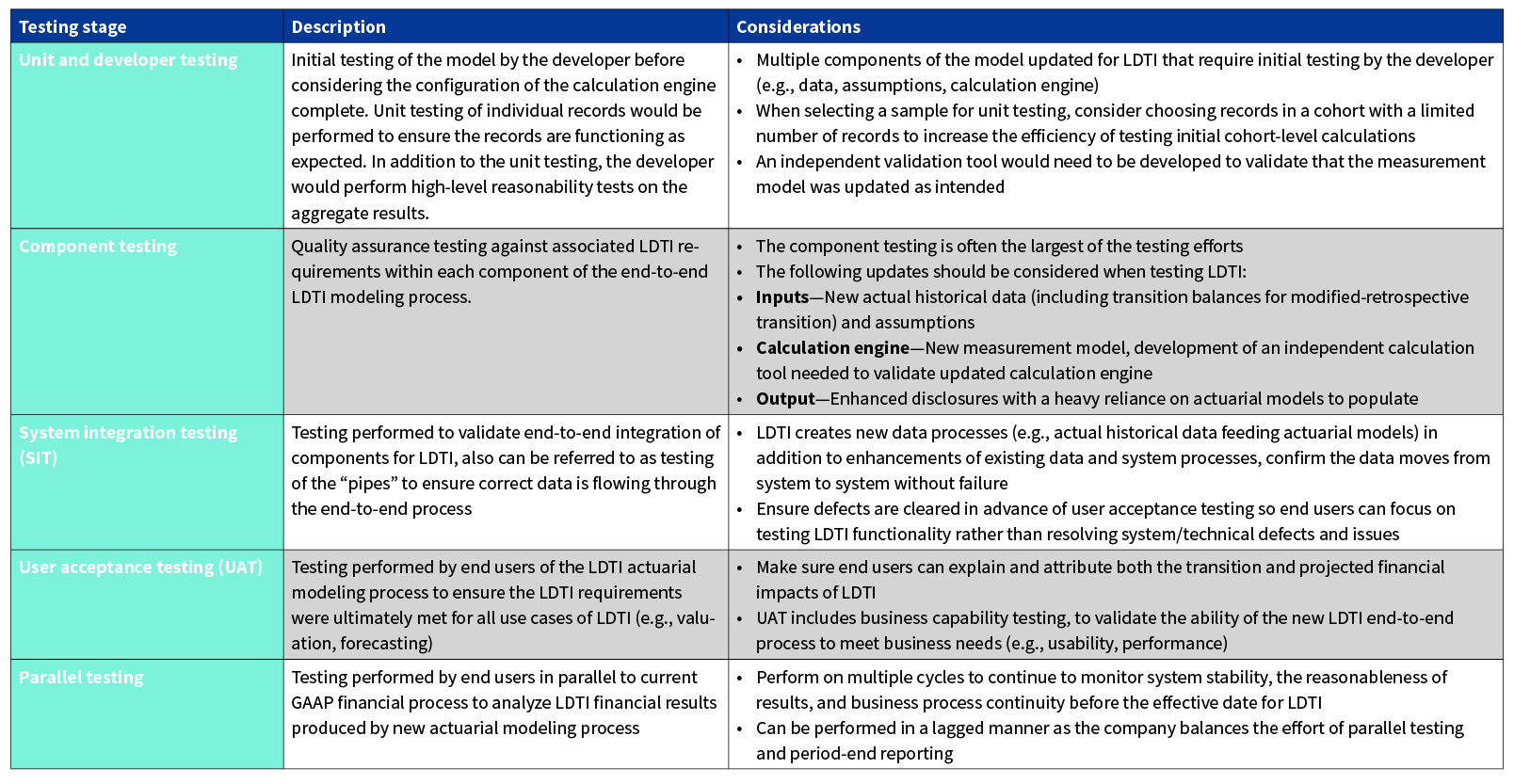

A well-designed test plan covers the entire end-to-end process of the model. The table below provides a high-level summary of generalized stages of testing that are often employed to assure the quality of the LDTI model(s), considering all aspects of the end-to-end process:

Each testing stage should have clearly outlined activities and acceptance criteria agreed upon before testing begins. This provides the ability to communicate the progress of testing to management and determine when success is achieved for each stage of testing. The actuarial modeler will most likely have to develop independent calculation tools to validate the new LDTI calculations. Accounting for this effort early in the planning phase will help ensure these tools are ready when testing needs to be performed. The actuarial modeler should also consider allowing ample time for user acceptance testing (UAT). The baselining effort to explain transition impacts from current GAAP to LDTI results can be more significant than a model conversion.
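The baselining effort can be organized as a sequence of model runs, each changing one item, with the difference between successive runs attributed to that item. The Python sketch below shows the bookkeeping only. The step labels and balances are hypothetical figures invented for illustration, and the helper names `waterfall` and `unexplained` are assumptions rather than anything prescribed by the standard or a vendor platform.

```python
from typing import List, Sequence, Tuple


def waterfall(opening_value: float,
              steps: Sequence[Tuple[str, float]]) -> Tuple[List[Tuple[str, float]], float]:
    """Attribute the change to each step.

    steps is an ordered list of (label, balance after applying that step).
    Returns the per-step impacts and the balance after the final step.
    """
    rows: List[Tuple[str, float]] = []
    previous = opening_value
    for label, value_after in steps:
        rows.append((label, value_after - previous))
        previous = value_after
    return rows, previous


def unexplained(opening_value: float, rows: Sequence[Tuple[str, float]],
                reported_closing: float) -> float:
    """Residual between the independently reported closing balance and the waterfall."""
    explained_closing = opening_value + sum(impact for _, impact in rows)
    return reported_closing - explained_closing


# Hypothetical step runs from a current GAAP balance to an LDTI balance.
current_gaap_balance = 1000.0
step_runs = [
    ("Update cash flow assumptions", 1030.0),
    ("Remove provisions for adverse deviation", 985.0),
    ("Remeasure at current discount rate", 1100.0),
]
impacts, ldti_balance = waterfall(current_gaap_balance, step_runs)
residual = unexplained(current_gaap_balance, impacts, reported_closing=1100.0)
```

Because the impacts depend on the order in which changes are applied, the step order is itself a design decision worth agreeing with stakeholders up front. A nonzero `residual` against the production LDTI balance signals a change that the step runs did not capture.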

Putting It All Together

LDTI has presented some interesting challenges to the end-to-end modeling process, including considerations beyond simply updating the calculation engine. LDTI will demand more from the actuarial modeling process on a go-forward basis, which will increase the pressure on the company’s ability to effectively manage the modeling environment. By proactively refining their future state modeling vision, considering the full end-to-end modeling process beyond the calculation engine, and developing a strategic approach toward testing, companies can put themselves in a better position to successfully implement LDTI.

The views expressed in this article are solely the views of Dave Czernicki, Ryan Laine, Jean-Philippe Larochelle, and Cassie He and do not necessarily represent the views of Ernst & Young LLP or other member firms of the global EY organization, or the Society of Actuaries. The information presented has not been verified for accuracy or completeness by Ernst & Young LLP and should not be construed as legal, tax, or accounting advice. Readers should seek the advice of their own professional advisors when evaluating the information.

Dave Czernicki, FSA, MAAA, is a principal at Ernst & Young LLP. He can be reached at dave.czernicki@ey.com.

Ryan Laine, FSA, CERA, MAAA, is a senior manager at Ernst & Young LLP. He can be reached at ryan.laine@ey.com.

Jean-Philippe Larochelle, FSA, CERA, is a senior manager at Ernst & Young LLP. He can be reached at jeanphilippe.larochelle@ey.com.

Cassie He, FSA, MAAA, is a manager at Ernst & Young LLP. She can be reached at cassie.he@ey.com.