Regulatory Outlook for AI in the Insurance Industry

By David Sherwood and Emily Li

Risk Management, October 2023

Artificial intelligence (AI) and machine learning (ML) are rapidly transforming the insurance industry. These technologies offer insurers the opportunity to improve efficiency, reduce costs, and provide better customer service. However, the use of AI/ML also raises new risks and faces regulatory challenges.

Governments and regulatory bodies around the world are working to develop frameworks for the responsible use of AI/ML in the insurance industry. These frameworks are designed to protect consumers from bias and unethical use of AI, while still allowing insurers to use these technologies to improve their processes. At a high level, the current regulations contain the following specific areas of focus:

- Data protection: Insurers need to ensure that they collect and use data in a fair and transparent way.

- Algorithmic transparency: Insurers need to be transparent about how AI/ML models are developed and used.

- Bias mitigation: Insurers need to take steps to mitigate the risk of bias in AI/ML models.

- Ethical use: Insurers need to use AI/ML in an ethical and responsible way.

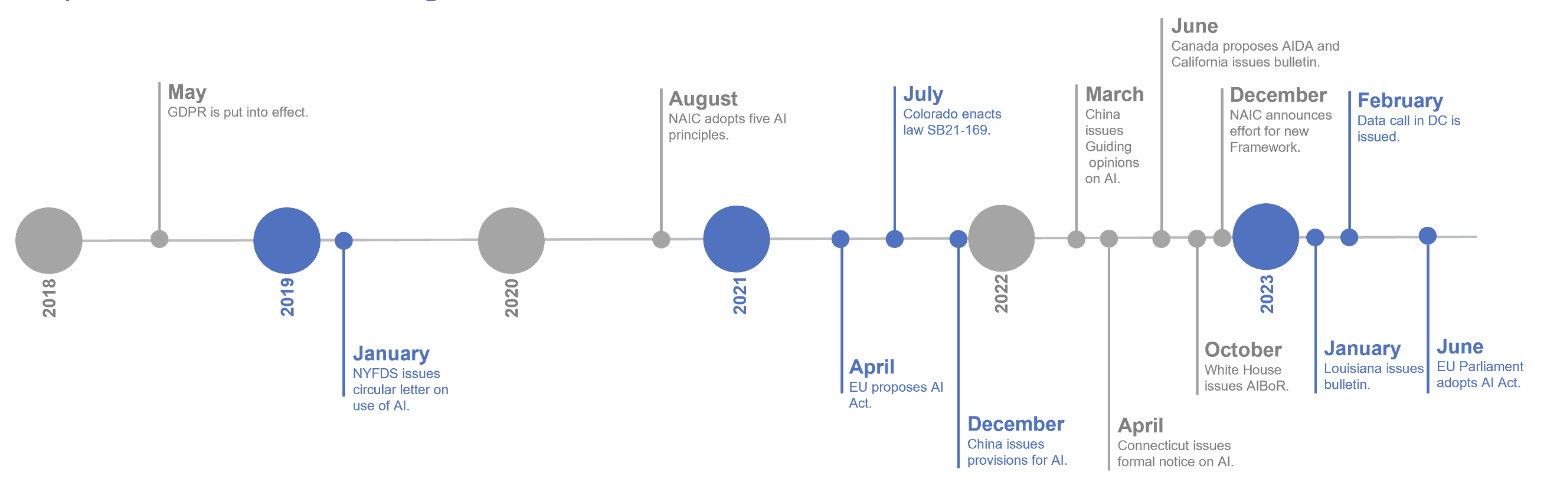

The regulatory landscape for AI/ML in the insurance industry is still evolving. However, it is clear that more regulatory activity will occur in the coming years and that it will play an important role in ensuring the use of AI/ML benefits customers and the industry as a whole. Graph 1 provides a timeline of AI/ML-related regulations, which shows an increasing volume and velocity of such regulations.

Graph 1

Timeline of AI/ML Regulations

The growing regulatory landscape for AI/ML means that insurers need to be aware of the regulatory requirements that apply to their use of these technologies. Insurers also need to be prepared to demonstrate that they are using AI/ML in a fair, transparent, and ethical way through enhanced internal risk management and governance processes covering the development, implementation, use, and disclosure of these tools.

Current Regulatory Snapshot

United States

Although there are no federal-level AI/ML regulations in the US, a number of state and sector-specific regulations are in place that provide guidance on the use of AI/ML tools.

NAIC

In August 2020, the National Association of Insurance Commissioners (NAIC) published the "Principles of Artificial Intelligence," broadly setting the regulatory tone for AI in insurance with five key principles. These principles are based on the Organization for Economic Co-operation and Development's (OECD) AI principles that have been adopted by 42 countries. The five principles are as follows:

- Fair and ethical: AI actors should respect the rule of law throughout the AI life cycle. AI actors should proactively engage in responsible stewardship of trustworthy AI in pursuit of beneficial outcomes for consumers and to avoid proxy discrimination against protected classes.

- Accountable: AI actors should be accountable for ensuring that AI systems operate in compliance with these principles consistent with the actors’ roles, within the appropriate context and evolving technologies. Companies must retain the data supporting final AI outcomes.

- Compliant: AI actors must have the knowledge and resources in place to comply with all applicable insurance laws and regulations, and compliance is required whether violations would be intentional or unintentional.

- Transparent: AI actors must have the ability to protect confidentiality of proprietary algorithms and demonstrate adherence to individual state law and regulations in all states where AI is deployed. Regulators and consumers should have a way to inquire about, review, and seek recourse for AI-driven insurance decisions in an easy-to-understand presentation.

- Secure/safe/robust: AI systems should be robust, secure, and safe throughout the entire life cycle in conditions of normal or reasonably foreseeable use; AI actors should ensure a reasonable level of traceability in relation to data sets, processes, and decisions made during the AI system life cycle.

The principles above hold AI actors responsible for the creation, implementation, and impact of their models while ensuring that their risk management approach applies to each phase of the AI life cycle and adheres to existing laws and regulations.

In late 2022, the NAIC's Innovation, Cybersecurity, and Technology (H) Committee announced an effort to adopt a more specific regulatory framework for the use of AI. This framework will be designed to address bias and unfair discrimination in AI systems through principles-based, high-level guidance.

In July 2023, the NAIC's H Committee released an exposure draft of the model bulletin on the use of AI by insurers. This draft outlined insurance regulators’ expectations for AI use and governance. It is principles-based and encourages, but does not mandate, insurers to develop and implement a written program for the use of AI, predictive models, and algorithms to ensure their use does not violate existing legal standards, such as the unfair trade practice laws or other applicable legal standards. It does not create new regulations.

The States

To date, 11 states have enacted AI legislation, and eight more have proposed AI legislation. Graph 2 provides a snapshot of the legislation status by state.

Graph 2

Legislation Status by State

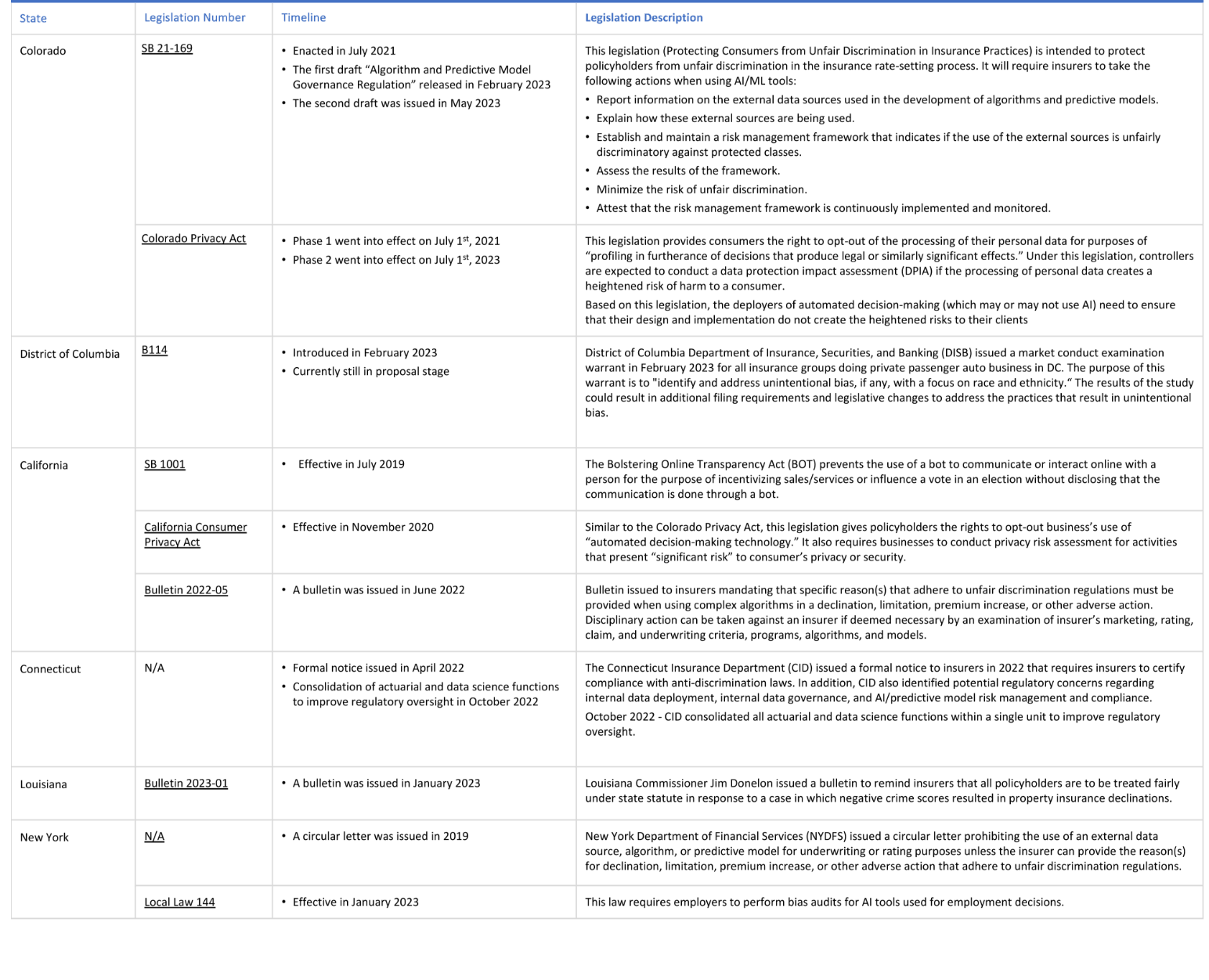

Table 1 summarizes the legislation that has been enacted or proposed in the six states that have been active in regulating the use of AI/ML models.

Table 1

Enacted or Proposed Legislation

The White House

The White House Office of Science and Technology Policy (OSTP) released an AI Bill of Rights (AIBoR) in October 2022 to provide guidance to organizations on the ethical use of AI. This Bill of Rights contains five principles:

- Safe and effective systems: Protect against inappropriate or irrelevant data usage through testing, monitoring, and engaging stakeholders, communities, and domain experts.

- Algorithmic discrimination protections: Protect against discrimination by designing systems equitably and making system evaluations understandable and readily available.

- Data privacy: Protect against privacy violations by limiting data collection and ensuring individuals maintain control of their data and how it is used.

- Notice and explanation: Provide clear and timely explanations for any decisions or actions taken by an automated system.

- Human alternatives, consideration, and fallback: Provide opportunities to opt out of automated systems and access to persons who can efficiently remedy problems encountered in the system.

In addition, the National Institute of Standards and Technology (NIST) published the “AI Risk Management Framework” in January 2023 to help organizations manage the risks associated with AI. The framework outlines the principles of trustworthy AI, which are consistent with the principles outlined by the OECD. It also provides four core functions that contain specific actions and outcomes to manage AI risks and develop trustworthy AI systems:

- Govern: A culture of risk management is cultivated and present.

- Map: Context is recognized, and risks related to the context are identified.

- Measure: Identified risks are assessed, analyzed, or tracked.

- Manage: Risks are prioritized and acted upon based on the projected impact.

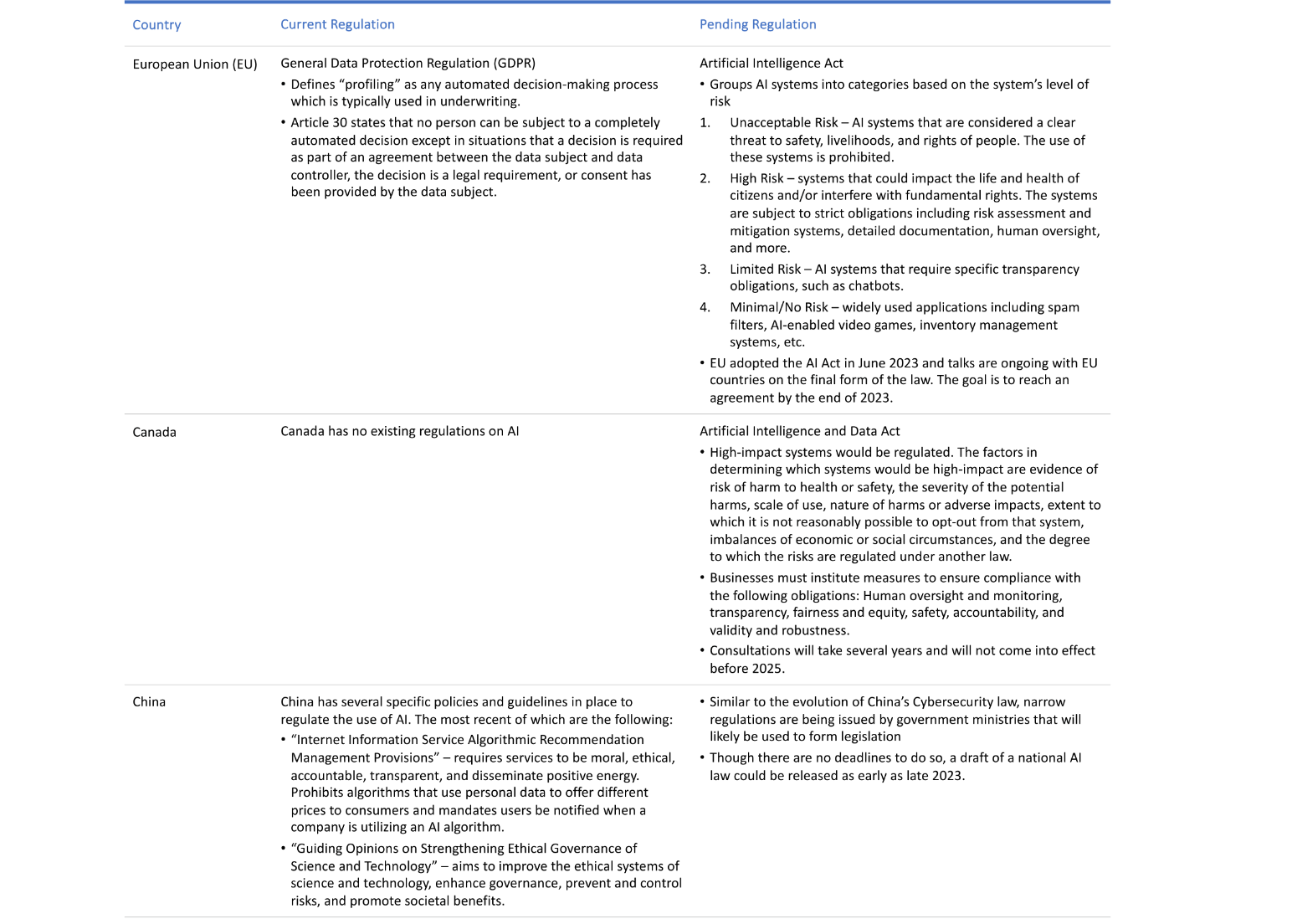

Other Countries

Beyond the United States, other countries have taken steps to mitigate the potential risks associated with AI in insurance. Some countries are building upon existing regulations, while others are introducing new AI regulations. These countries include those in the European Union (EU), as well as Canada and China. Table 2 outlines the current and pending regulations in place for these countries.

Table 2

Current and Pending Regulations Worldwide

Conclusion: It Is Time to Buckle Up

As the use of AI in the insurance industry grows, so too does the risk in AI-powered decisions. While there are no standardized AI risk management and testing protocols yet, it is only a matter of time before they are developed. Insurers should start preparing now by implementing governance and frameworks to identify, measure, and manage AI risks, such as bias, algorithmic opacity, cybersecurity vulnerability, and lack of accountability. The following activities could be leveraged to ensure that AI is used in a fair and ethical way.

- Frequently monitoring legislation and guidance principles from regulators.

- Training employees on AI risks and the ethical use of AI.

- Validating AI tools against objectives that balance model performance metrics, business performance metrics, and the ethical use of AI.

- Developing ongoing monitoring systems to test AI outputs for potential bias from various sources (e.g., data, algorithm, interpretation).

- Being ready to respond to consumer complaints about potential bias.

- Improving AI interpretability and explainability through activities such as pre-modeling exploratory data analysis and post-hoc testing techniques.

- Sharing information with customers about AI-based decisions in a timely and clear way.

- Implementing policies and procedures to ensure that all business segments (e.g., modelers, business users, IT, risk management, compliance) are aligned with AI guiding principles.

- Maintaining strong oversight and communications with third-party vendors.
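
As a minimal sketch of the ongoing bias monitoring described above, the Python example below compares approval rates across groups and flags a model for review when the ratio of the lowest to the highest rate falls below a threshold. The function names, the sample data, and the 0.8 threshold are illustrative assumptions, not a regulatory standard or a method prescribed by any of the frameworks discussed here. The threshold echoes the "four-fifths" rule of thumb from US employment-selection guidance, and an insurer would need to choose metrics and thresholds appropriate to its own products and jurisdictions.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    if not totals:
        raise ValueError("no decisions supplied")
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so the rates are equal
    return min(rates.values()) / highest


def flag_for_review(decisions, threshold=0.8):
    """Flag the model when the ratio falls below the (assumed) threshold."""
    rates = approval_rates(decisions)
    ratio = adverse_impact_ratio(rates)
    return ratio < threshold, ratio, rates


# Hypothetical decisions: group A approved 80 of 100, group B 60 of 100.
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 60 + [("B", False)] * 40
)
flagged, ratio, rates = flag_for_review(sample)
print(f"rates={rates} ratio={ratio:.2f} flagged={flagged}")
```

A single ratio such as this is only one screening signal. In practice it would sit alongside other tests of the data, the algorithm, and the interpretation of results, with any flag routed to human review rather than treated as a finding of bias.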

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the newsletter editors, or the respective authors’ employers.

David Sherwood is a managing director at Deloitte. He can be reached at dsherwood@deloitte.com.

Emily Li, FSA, CERA, MAAA, is a manager at Deloitte. She can be reached at mengrli@deloitte.com.