The Future of Actuarial Models

By Nate Worrell

Actuary of the Future, July 2022

Many actuaries use models to predict the future, but what does the future of predicting the future look like?

To begin, consider the definition of a model from ASOP 56.

“A simplified representation of relationships among real world variables, entities, or events using statistical, financial, economic, mathematical, non-quantitative, or scientific concepts and equations. A model consists of three components: an information input component, which delivers data and assumptions to the model; a processing component, which transforms input into output; and a results component, which translates the output into useful business information.”

For each main part (information, processing, results), this article will reflect on the current state and then attempt to look ahead at the near and distant future. The article is oriented around the life and health insurance sectors. Its speculations about the future are meant to be thought-provoking and are not based on any sort of market survey.

Part 1—The Information Component

The information component can be subdivided into three elements: actuarial assumptions, economic assumptions, and data.

1A—Actuarial Assumptions

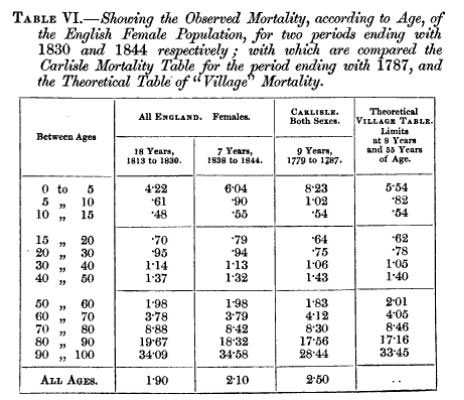

In the 17th century, a haberdasher named John Graunt combed through church records of births and deaths. Since that day, the mortality table has had an impressive run as the core element of life models. Other tabular assumptions can be found across the actuarial spectrum. Prescribed tables have been the backbone of statutory reserving for all product types. Regulations are beginning to give actuaries a bit more freedom in setting assumptions, but these tabular inputs haven’t yet gone away.

English Female Mortality. Publishers Charles & Edward Layton, Public domain, via Wikimedia Commons

In the modern era actuaries are adding a bit more sophistication. Dynamic policyholder actions such as selective lapsation or annuity withdrawal behavior make their way into models. The skill set of actuaries expands from applied statistics to behavioral economics.

Additionally, assumptions gain color and depth with trends and sensitivities to provide some way to think about their inherent uncertainty.

In today’s world, actuarial assumptions may require a bit of effort to study and a little more effort to code, but generally the core actuarial assumptions of a model can be contained in a few tabs of an Excel workbook.

But, where are things headed?

In the near future, in the same way Netflix or Amazon takes an algorithmic approach to figure out what to recommend to you, it may be that the mortality assumption in your model is a function instead of a rate. Whether that function uses purchasing behavior, wearable devices, or geospatial tracking will depend on how the insurance industry adopts the universe of big data and machine learning.
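To make the contrast between a rate and a function concrete, here is a minimal sketch. The base rates, the covariates (`daily_steps`, `smoker`), and every coefficient are invented for illustration and are not fitted to any data; a production assumption would come from a properly validated model.

```python
import math

# Hypothetical base table: annual mortality rates by attained age (illustrative only).
BASE_QX = {40: 0.0015, 50: 0.0035, 60: 0.0085}


def table_mortality(age: int) -> float:
    """Classic approach: the assumption is a rate looked up from a table."""
    return BASE_QX[age]


def functional_mortality(age: int, daily_steps: float, smoker: bool) -> float:
    """Assumption as a function: adjust the base rate using extra covariates.

    The coefficients below are made up for this sketch.
    """
    base = BASE_QX[age]
    # Work on the log-odds scale so the adjusted rate stays between 0 and 1.
    log_odds = math.log(base / (1.0 - base))
    log_odds += -0.05 * (daily_steps - 5000.0) / 1000.0  # more activity lowers the rate
    log_odds += 0.7 if smoker else 0.0                   # smoking raises the rate
    return 1.0 / (1.0 + math.exp(-log_odds))


active_nonsmoker = functional_mortality(50, daily_steps=10000, smoker=False)
sedentary_smoker = functional_mortality(50, daily_steps=2000, smoker=True)
```

At 5,000 steps and non-smoker status the function reproduces the table rate, so the table becomes a special case of the function rather than something it replaces.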

Once again, the knowledge base of the actuary must expand, and it may very well approach the limits of a single human mind. As such, there will be an increased need for helpers, both human and digital. Assumption management systems will become as critical as the assumptions themselves.

In the far future, imagine continuous, real-time assumptions, with hourly mortality forecasts that are paired with your daily weather. Assumptions may not be static, but fluid. They will range from microscopic to global, an endless well from which actuaries can attempt to glean glimpses of the future.

1B—Economic Assumptions

Where would an actuary be without the time value of money? The discount rate may be the most powerful element of an actuarial model. Yet, in many cases, it is a single number.

Recent regulation brought in more complexity, factoring in yield curves and deterministic scenarios.
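The step from a single discount rate to a yield curve can be sketched in a few lines. The cash flows and rates below are made-up inputs, and the function names are illustrative rather than taken from any regulation or software.

```python
def pv_flat(cash_flows, rate):
    """Present value with one discount rate; cash_flows[t-1] is paid at the end of year t."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


def pv_curve(cash_flows, spot_rates):
    """Present value with a separate spot (zero-coupon) rate for each maturity."""
    if len(cash_flows) != len(spot_rates):
        raise ValueError("need one spot rate per cash flow")
    return sum(
        cf / (1.0 + r) ** t
        for t, (cf, r) in enumerate(zip(cash_flows, spot_rates), start=1)
    )


cash_flows = [100.0, 100.0, 100.0]                       # hypothetical benefit payments
flat_value = pv_flat(cash_flows, 0.03)                   # one number for every year
curve_value = pv_curve(cash_flows, [0.02, 0.03, 0.04])   # upward-sloping curve
```

When every point on the curve equals the flat rate, the two functions agree, which is a handy sanity check when a model moves from one approach to the other.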

Beyond the discount rate, as insurance products became more market linked and required hedging strategies, the importance of stochastic scenarios emerged. Actuaries had to get comfortable with the difference between risk-neutral and real-world orientations.
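The two orientations, usually called risk-neutral and real-world, can be illustrated with a toy scenario generator. This sketch assumes geometric Brownian motion for an equity index; the risk-free rate, expected return, and volatility are hypothetical values, and real scenario generators are considerably richer.

```python
import math
import random


def simulate_terminal_values(s0, drift, vol, years, n_scenarios, seed=0):
    """Simulate end-of-horizon index values under geometric Brownian motion."""
    rng = random.Random(seed)
    values = []
    for _ in range(n_scenarios):
        z = rng.gauss(0.0, 1.0)  # one standard normal shock per scenario
        growth = (drift - 0.5 * vol ** 2) * years + vol * math.sqrt(years) * z
        values.append(s0 * math.exp(growth))
    return values


RISK_FREE = 0.03         # hypothetical risk-free rate
EXPECTED_RETURN = 0.07   # hypothetical real-world equity return
VOL = 0.15               # hypothetical volatility

# Risk-neutral scenarios drift at the risk-free rate (used for market-consistent pricing).
risk_neutral = simulate_terminal_values(100.0, RISK_FREE, VOL, 1.0, 20000, seed=1)
# Real-world scenarios drift at the expected return (used for projecting outcomes).
real_world = simulate_terminal_values(100.0, EXPECTED_RETURN, VOL, 1.0, 20000, seed=1)

mean_rn = sum(risk_neutral) / len(risk_neutral)
mean_rw = sum(real_world) / len(real_world)
```

Using the same seed for both runs isolates the effect of the drift: the shocks are identical, and only the assumed growth rate differs.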

Thankfully, computing power continues to keep pace with modeling demands. Today’s actuary can even solicit a grid of computers in the cloud to perform computations for thousands of scenarios across millions of policies. It may even be the case that at every moment of the day an actuarial computation is happening somewhere.

In 1929, the son of an insurance executive looked out at the stars and observed an increasingly expanding universe. Might Edwin Hubble’s observations on the cosmos also ring true for the future of economic assumptions? Will they continue to expand in complexity at an ever-increasing rate?

In the near future, there will be more requirements to link non-economic events into economic models. Climate-based scenarios are beginning to show up as part of scenario generation. Other narrative-based scenarios, such as civil unrest or pandemics, may follow.

And how will actuaries adapt to the emergence of cryptocurrencies? Do they behave in the same way as a stock, or as other currencies?

The far future of economic assumptions will be more of a system than a single number or set of numbers. It’s hard to look ahead and not see a huge plate of spaghetti, with lots of interconnected threads. With such a complicated ecosystem, it gets easier to miss a risk, or to be caught by surprise. Once again, there may be a need to complement the human actuaries with robo-actuary programs scrubbing and testing the models.

1C—Data

Both economic and actuarial assumptions rely on data. Historically, data sources were streamlined and simple, with minimal dimensionality. Mortality might vary by age, gender, and tobacco use. Interest rates looked to the historical yields of a bond and equity portfolio.

Today is the age of machine learning and predictive analytics. Data science both challenges and complements the analytical work actuaries do. The life sector is slowly catching up to the P&C sector in terms of finding and quantifying regressive elements in the models.

The data that exists now is essentially infinite. We are compiling databases of human DNA. We have devices that track our movements, our sleeping, our breathing, and more. There will likely be continually more smart devices, perhaps even your toothbrush and toilet.

On top of that already enormous pile of data is the continual documentation of the world around us by civilian videographers and the subsequent buzz of chatter occurring on social media.

The near future is very exciting as actuaries mine the data universe and adopt the mantra of a certain starship captain and “boldly go where no actuary has gone before.”

An interesting consequence of studying the factors that seem to indicate a higher likelihood of death or sickness is that the insurer may no longer be just an observer. Already there is a migration toward being more of an influencer, as insurers try to nudge policyholders toward healthier behaviors.

In the far future, the insurance company may instead be a kind of human psychology lab, with an ability to get real-time feedback on various behavioral modification approaches. With such power will come great responsibility, and as such the imperative of a professional, ethical actuary must continue to be a cornerstone of the practice.

Summary

There is an ancient myth that the world is supported on the back of a giant cosmic turtle. What then is the turtle standing on? Why, another turtle of course! And it is turtles all the way down.

Turtles all the way down, on a statue in Savannah, Georgia. Photo: Nathan Worrell

It may be that the future of the information layer of actuarial models becomes models on top of models. In other words, models all the way down. However complicated the feeder system of the actuarial model becomes, actuaries need to start preparing for the coming flood today. Develop data and assumption management skills. Build automation and augmentation technologies. Connect with other teams. Actuaries will not survive in isolation.

Part 2—The Processing Component

The history of actuarial computation has a certain elegance that non-actuaries may never fully appreciate.

In the early days, the world of commutation functions gave actuaries an alphabet soup to price and value insurance. Cx, Nx, Mx, Dx, Ax, and so forth, once developed, could simply be referenced with nothing more complicated than a lookup function.
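As a rough illustration of why a lookup was enough, the sketch below builds D, N, C, and M columns from a tiny mortality table and derives a whole life insurance value as M divided by D. The mortality rates, the 4 percent interest rate, and the function name `commutation_table` are invented for this example.

```python
def commutation_table(qx_by_age, interest, radix=100000.0):
    """Build commutation columns D, N, C, M from mortality rates for consecutive ages.

    The last age should have a rate of 1.0 so that the table is closed.
    """
    v = 1.0 / (1.0 + interest)  # one-year discount factor
    ages = sorted(qx_by_age)
    lives = radix
    D, C = {}, {}
    for age in ages:
        deaths = lives * qx_by_age[age]
        D[age] = v ** age * lives          # discounted survivors
        C[age] = v ** (age + 1) * deaths   # discounted deaths, paid at year end
        lives -= deaths
    # N and M are running sums of D and C from the oldest age backward.
    N, M = {}, {}
    running_d = running_c = 0.0
    for age in reversed(ages):
        running_d += D[age]
        running_c += C[age]
        N[age] = running_d
        M[age] = running_c
    return D, N, C, M


TOY_QX = {95: 0.25, 96: 0.30, 97: 0.40, 98: 0.60, 99: 1.00}  # illustrative rates
D, N, C, M = commutation_table(TOY_QX, interest=0.04)

A_95 = M[95] / D[95]             # whole life insurance of 1, payable at end of year of death
annuity_due_95 = N[95] / D[95]   # whole life annuity-due of 1 per year
```

Once the columns exist, every premium or reserve at any age is a ratio of two looked-up values, which is what made the approach workable before computers.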

And let us not forget the Hewlett-Packard calculators that used Reverse Polish notation.

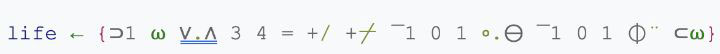

APL came with the beginning of the computing era, pioneered by mathematician Kenneth Iverson, the son of Canadian farmers. This alien-looking syntax resulted in concise code that could handle multidimensional arrays. The following single line of code (from Wikipedia) takes an input matrix and calculates a new generation according to the “Game of Life.”

https://en.wikipedia.org/wiki/APL_(programming_language)

We are currently transitioning from the age of Excel and VBA to platforms like R and Python for analytical purposes. Hopefully this migration can retain some of the grace of our early days.

Meanwhile, actuarial software systems continue to help actuaries conduct the routine business of valuation, pricing and ALM. Recent trends in regulatory and accounting changes demand more information, more runs, and more tests.

Finding efficiency will become an in-demand skill. Actuaries will need to be proficient in concepts like clustering and proxy models.
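One common efficiency technique is to compress an inforce file into a smaller set of representative model points. The sketch below uses simple grid-based grouping by age band and sex as a stand-in for more sophisticated cluster analysis; the policy fields (`age`, `sex`, `face`) and the five-year band width are assumptions made for illustration.

```python
from collections import defaultdict


def compress_policies(policies, age_band=5):
    """Group policies into (age band, sex) cells and return one model point per cell.

    Each model point carries the cell's total face amount and a face-weighted
    average age. Face amounts are assumed to be positive.
    """
    cells = defaultdict(lambda: {"count": 0, "face": 0.0, "age_x_face": 0.0})
    for policy in policies:
        band = (policy["age"] // age_band) * age_band
        cell = cells[(band, policy["sex"])]
        cell["count"] += 1
        cell["face"] += policy["face"]
        cell["age_x_face"] += policy["age"] * policy["face"]

    model_points = []
    for (band, sex), cell in sorted(cells.items()):
        model_points.append({
            "age": cell["age_x_face"] / cell["face"],  # face-weighted average age
            "sex": sex,
            "face": cell["face"],
            "count": cell["count"],
        })
    return model_points


inforce = [  # hypothetical policies
    {"age": 41, "sex": "F", "face": 100000.0},
    {"age": 43, "sex": "F", "face": 300000.0},
    {"age": 44, "sex": "M", "face": 250000.0},
    {"age": 52, "sex": "F", "face": 150000.0},
]
model_points = compress_policies(inforce)
```

Here four policies collapse into three model points while the total face amount is preserved. In practice the grouping would be validated by comparing results from the compressed and full files.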

As the input layer changes, so will the calculation engine, and so will the output layer. Ultimately, the computing engine will serve more purposes than solving for a number. It will also be an aggregator and communicator. Just as the future actuary cannot remain independent of other teams, the calculation engine must have established connections to input and output interfaces. To use a manufacturing-oriented analogy, the supply chain logistics and distribution channels will be as critical as the assembly and production.

The far future will be linked to the future of computing. Perhaps robots will build and test the code on a quantum chip. Or maybe after you kick off calculations with a blink of your eye, some organic artificial brain in a lab will go to work to synthesize the ocean of calculations. Or maybe you will hook up your own brain to the cloud and resolve questions of risk in some symbiotic way.

The Future Actuary? From the SOA 14th Speculative Fiction Contest. By Nathan Worrell

Part 3—The Results Component

In a famous moment from Douglas Adams’ The Hitchhiker’s Guide to the Galaxy, the supercomputer Deep Thought prepares to produce a result after seven and a half million years of computing. …

“All right,” said the computer, and settled into silence again. The two men fidgeted. The tension was unbearable.

“You’re really not going to like it,” observed Deep Thought.

“Tell us!”

“All right,” said Deep Thought. “The Answer to the Great Question …”

“Yes!”

“Of Life, the Universe and Everything …” said Deep Thought.

“Yes!” …

“Is …” said Deep Thought, and paused.

“Yes!” …

“Is …”

“Yes!?” …

“Forty-two,” said Deep Thought, with infinite majesty and calm.

42. A number. For much of actuarial work, the final result has numerical representation. A premium. A capital ratio. A reserve balance. All those inputs and all that arithmetic distilled to a few digits. In some ways, it is quite magical. Like combining a smattering of random ingredients together, popping them in an oven, and coming out with a cake.

If producing this fresh-baked number is the first leg of the actuarial journey, understanding it becomes the second. This number is a guess, and it is up to the actuary to then examine its robustness. From one number come many: sensitivity tests, key performance indicators, variance, durations, correlations, and more.
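A simple way to see how one number becomes many is to shock an input and re-run the calculation. This sketch bumps a discount rate up and down by one percentage point and derives an effective duration from the results; the cash flows, base rate, and shock size are hypothetical.

```python
def present_value(cash_flows, rate):
    """Present value of year-end cash flows at a single annual discount rate."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


def rate_sensitivity(cash_flows, base_rate, bump=0.01):
    """Re-value under up and down rate shocks and estimate effective duration."""
    base = present_value(cash_flows, base_rate)
    up = present_value(cash_flows, base_rate + bump)
    down = present_value(cash_flows, base_rate - bump)
    # Central-difference estimate of the percentage change in value per unit change in rate.
    effective_duration = (down - up) / (2.0 * base * bump)
    return {"base": base, "up": up, "down": down, "effective_duration": effective_duration}


liability_cash_flows = [0.0] * 9 + [1000.0]  # a single payment due in 10 years
result = rate_sensitivity(liability_cash_flows, base_rate=0.03)
```

For a single payment in 10 years, the estimated duration should land close to the modified duration of 10 / 1.03, which gives a quick reasonableness check on the sensitivity run.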

While charting has been around for some time, the bulk of actuarial analysis has been tabular and textual. Digesting the content has required some effort.

These days, visualization and business intelligence tools can provide interactive elements to the universe of outputs. Pictures can tell a story a lot quicker than text. They can also easily distort a story if used incorrectly. Deciding where, when, and how to incorporate useful visualization is a muscle that actuaries would be wise to develop.

The numbers will always be there, but take the results to the next level and find the narrative behind the number.

In the current to near future, key takeaways will be provided by computing systems as soon as the results finish. There are already algorithms in deployment that summarize corporate earnings from one period to the next. Though technology can be of assistance, there is still a human element that will make the story more compelling. Part of the actuary’s job will be to build and deliver compelling stories using their model results.

Like the input layer, the output layer also faces the challenge of ever-expanding volumes of data. Synthesizing, storing, and validating such massive quantities of information may require innovative approaches to current practices.

In the far future, what may emerge is an entirely new way to experience results. Current trends in augmented and virtual reality may give you a chance to “walk” through the model results as if they were landscapes in a national park. You could climb peaks and descend through valleys and experience a 360-degree view of the elements that drive your results.

Conclusion

Across the model ecosystem, the future of actuarial modeling will look quite different from today.

- Future models will be heavier
  - More data in and out
  - More calculations at increasing frequencies

- Future models will not exist in isolation and neither will actuaries
  - “Models all the way down”
  - Connectivity to other systems and areas
  - One mind can’t understand it all

- Actuaries will require new skills and technology
  - Storage and management of data, assumptions, and results
  - Automation of model construction and analysis
  - Efficiency techniques
  - Uncovering and explaining the narrative behind the numbers

Yet some things will stay the same:

- The world will need objective and trustworthy professionals. This new world will require increased vigilance with other professional standards.
  - Data (ASOP 23): garbage in still leads to garbage out
  - Communication (ASOP 41)
  - Code of Conduct to ensure ethical algorithms

- Model complexity must be weighed against the principles of parsimony and efficiency. Said differently with the words of George Box—models (by their nature) will always be “wrong,” but how much more “useful” will they become?

Finally, while forward-looking, the observations and speculations in this article are still anchored in the world of today. This is a decent approach for the near future. However, consider that John Graunt did not have a computer when he was tabulating death rates. Edwin Hubble had his head in the stars, but was totally earthbound. Kenneth Iverson couldn’t brag about APL on his Twitter account.

The only thing certain about our uncertain future is that it awaits with surprises that will exceed our wildest dreams.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the newsletter editors, or the respective authors’ employers.

Nathan Worrell, FSA, is a client relationship actuary with Moody’s Analytics. He can be contacted at nathan.worrell@moodys.com.