Adapting Credibility Blended Rates to Encompass Machine Learning Predictions

By Jason Reed

Predictive Analytics and Futurism Section Newsletter, April 2021

Credibility theory is one of the most fundamental components of actuarial pricing. Blending a more specific but highly variable estimate (group experience) and a less representative but more stable estimate (manual) is one of the pillars of the actuarial profession. Even long after writing our last exam, actuaries fondly remember the formula:

z*X̅+(1-z)*manual

But does this formula still meet the needs of pricing actuaries in 2021? Advancements in the areas of Big Data and Predictive Modeling suggest new ways to weight multiple estimators. COVID has highlighted that in times of increased uncertainty, simple measures of member months or life years may not adequately reflect the confidence we have in the sample mean. In this article, we suggest why traditional credibility techniques may be insufficient and introduce a few new methods of combining multiple uncertain estimators.

A Refresher on Limited Fluctuation Credibility

Limited fluctuation (LF) credibility contains two important ideas:

- A standard for full credibility that comes from calculating how close (within x percent) the sample mean should be to the true population mean, with high (90–99 percent) probability p; and

- how much partial credibility, z, should be assigned when this standard is not met, such that the weighted average estimator

z*X̅+(1-z)*manual

has the same variance as X̅ alone would have if the sample mean were fully credible.
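The two ideas above can be sketched in a few lines of Python. This is a minimal illustration under the usual Normal approximation, not a production pricing routine; the function names, the tolerance k=0.05, the probability p=0.90 and the sample figures are assumptions chosen for the example.

```python
import math
from statistics import NormalDist


def full_credibility_standard(mean, std_dev, k=0.05, p=0.90):
    """Exposure units needed for the sample mean to fall within k*mean
    of the true mean with probability p (Normal approximation)."""
    z_p = NormalDist().inv_cdf((1 + p) / 2)  # two-sided Normal quantile
    cv = std_dev / mean                      # coefficient of variation per unit
    return (z_p * cv / k) ** 2


def partial_credibility(n, n_full):
    """Square-root rule: choose z so that Var(z * X-bar) with n units
    equals Var(X-bar) at the full credibility standard n_full."""
    return min(1.0, math.sqrt(n / n_full))


def blended_rate(experience, manual, z):
    """Classical blend z * X-bar + (1 - z) * manual."""
    return z * experience + (1 - z) * manual


# Illustrative (hypothetical) inputs: coefficient of variation of 1.
n_full = full_credibility_standard(mean=1.0, std_dev=1.0)
z = partial_credibility(n=500, n_full=n_full)
rate = blended_rate(experience=120.0, manual=100.0, z=z)
print(round(n_full), round(z, 3), round(rate, 2))
```

Because the standard scales with the square of the coefficient of variation, a 10 percent error in the estimated standard deviation moves the full credibility standard by about 21 percent.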

For large enough samples, the first statement comes from the Central Limit Theorem, which says that the distribution of the sample mean converges to a Normal distribution. The second statement then follows by equating the variance of zX̅ (the manual is a constant) to the variance of a fully credible sample X̅*. LF credibility has stood the test of time for actuarial pricing, but two new stressors call for new ideas:

- The derivation of the credibility factor z depends on the relative weighting of a random estimator with a non-random standard of truth. It does not allow for the weighting of two estimates of the mean when neither is obviously superior to the other.

- LF credibility does not easily generalize to the weighting of multiple estimates (e.g., prior claims, risk scores and new data mining estimates) that may contain overlapping information.

In addition to these relatively new considerations, LF credibility also suffers from inherent theoretical difficulties:

- Leveraging the Central Limit Theorem and the formula for the variance of a sum to calculate partial credibility does not work when the random variables are not identically distributed.

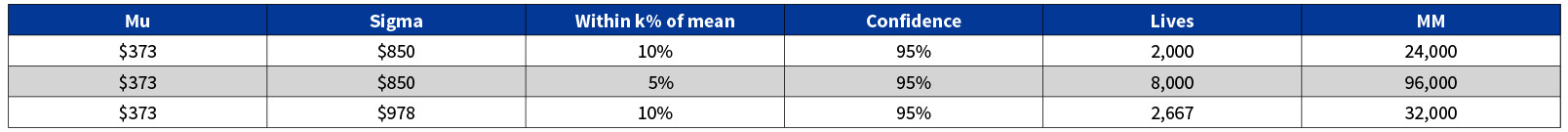

- The estimate of a full credibility standard is highly sensitive to our estimation of the mean and standard deviation. Small measurement errors arising from an outlier group could significantly impact our estimates. Table 1 illustrates the volatility:

Table 1

Estimates Volatility

There are alternatives to LF, such as Bühlmann credibility, but none adequately addresses the critical need to blend multiple variable estimators. In the subsequent sections we introduce two new ideas for assigning credibility: one is motivated by classical statistics and the other by machine learning.

Semi-partial Correlation

One way to generalize LF credibility is to ask what weights optimize a weighted average estimator of future year claims w1x1+w2x2+···+wnxn. In the case of n=2, x1=trended prior claims, x2=manual, we have the classical credibility problem with w1=z and w2=1-z. We could solve for w1, w2 by minimizing the mean squared error of the estimator, which, for unbiased and uncorrelated estimators, implies weighting each estimator in inverse proportion to its variance.

But looking at variance alone is too crude when the estimators are correlated. We do not want to count information common to estimators x1, x2 twice. For example, this happens when claims and risk scores are naïvely weighted despite being positively correlated and derived from diagnosis codes. We would like an estimate of credibility that is proportional to the information that each estimator provides and does not double-count information they have in common. This is particularly important when blending both estimators with a manual rate, where we need to be careful not to double-count multiple experience-based predictions and assign too much weight to group experience.
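To see how correlation changes the answer, consider a small sketch for two estimators. It assumes both are unbiased with known standard deviations and a known correlation rho, which is rarely true in practice; the function name and the numbers are hypothetical. Minimizing the variance of w1x1+(1-w1)x2 gives a closed form that reduces to inverse-variance weighting when rho=0.

```python
import math


def min_variance_weight(sd1, sd2, rho):
    """Weight w1 on estimator x1 that minimizes Var(w1*x1 + (1-w1)*x2),
    assuming both estimators are unbiased with correlation rho."""
    cov = rho * sd1 * sd2
    denom = sd1 ** 2 + sd2 ** 2 - 2 * cov
    if math.isclose(denom, 0.0, abs_tol=1e-12):
        # Identical, perfectly correlated estimators: any weight works.
        raise ValueError("weights are not identified")
    return (sd2 ** 2 - cov) / denom


# Hypothetical standard deviations for two experience-based estimators.
w_uncorrelated = min_variance_weight(sd1=10.0, sd2=20.0, rho=0.0)
w_correlated = min_variance_weight(sd1=10.0, sd2=20.0, rho=0.25)
print(w_uncorrelated, 1 - w_uncorrelated)  # inverse-variance weights
print(w_correlated, 1 - w_correlated)      # weights after allowing for overlap
```

In this example the noisier estimator loses weight once the overlap is recognized, because part of what it offers is already carried by the other estimator.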

Semi-partial correlation is a statistical technique that measures the impact of x1 on future year claims after factoring out the impact of x2. It is derived from the correlation of future year claims with the residual information in x1 that is not already explained after prediction using all of the information in x2.
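A minimal sketch of the calculation follows, assuming a simple linear regression is used to strip out of x1 whatever x2 already explains. The helper names and the six data points are hypothetical and serve only to make the example runnable.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = _mean(a), _mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def residuals(target, predictor):
    """Residuals from a least-squares fit of target on predictor (with intercept)."""
    mt, mp = _mean(target), _mean(predictor)
    slope = (sum((p - mp) * (t - mt) for p, t in zip(predictor, target))
             / sum((p - mp) ** 2 for p in predictor))
    intercept = mt - slope * mp
    return [t - (intercept + slope * p) for t, p in zip(target, predictor)]


def semipartial_correlation(y, x1, x2):
    """Correlation of y with the part of x1 that x2 does not explain."""
    return pearson(y, residuals(x1, x2))


# Hypothetical block of six groups.
future_claims = [100, 120, 90, 150, 130, 110]   # y
prior_claims = [95, 125, 85, 140, 135, 100]     # x1
risk_score = [1.0, 1.1, 0.9, 1.3, 1.2, 1.0]     # x2
print(semipartial_correlation(future_claims, prior_claims, risk_score))
```

The square of this quantity equals the increase in R-squared from adding x1 to a linear model that already contains x2, which is what makes it a natural measure of non-overlapping information.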

Shapley Statistic

The Shapley statistic is actually an idea from game theory. It imagines a situation where N players participate in a game with total payout φ(N). Each player makes a contribution towards the game’s objective. The Shapley statistic attempts to divvy up this total payout in a way that is “fair” to all players, meaning that each receives a payout proportional to his or her contribution. The way to calculate that contribution is to measure the marginal contribution made by each player when that player is added to various coalitions of the other players. Then, the reward that player receives is proportional to a weighted average of these contributions.

In the context of looking at estimators of future year claims, the game is to reduce Mean Squared Error (MSE) across a block of groups. Each predictor of future year claims makes a contribution quantified by how much MSE is reduced when that predictor is included in a model where some collection of the other predictors already exists. In the context of our example, let’s see how to assign credibility to prior claims in a new pricing model where the predictors are prior claims, risk scores and a gradient boosting machine (GBM). The possible contributions of prior claims are:

MSE(prior claims) – MSE(manual rate);

MSE(risk score, prior claims) – MSE(risk score);

MSE(GBM, prior claims) – MSE(GBM); and

MSE(GBM, risk score, prior claims) – MSE(GBM, risk score).

A weighted average of these improvements gives the contribution of prior claims towards the game of reducing MSE. We would then compare that to the contribution of the other players (GBM and risk scores) in order to assign credibility to each player.
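The weighted average can be sketched as follows. The MSE figures are purely hypothetical, and the payout of a coalition is taken here to be its MSE reduction relative to the manual rate alone, so that a helpful predictor receives a positive contribution. Normalizing the results into shares is one possible way to compare players, stated here as an assumption rather than a prescribed method.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Shapley value of each player, where value maps a frozenset of
    players (a coalition) to that coalition's payout."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Probability that p joins right after a given coalition of this
            # size when players arrive in a uniformly random order.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (value[s | {p}] - value[s])
        result[p] = total
    return result


# Hypothetical MSE by model; the empty coalition is the manual rate alone.
mse = {
    frozenset(): 100.0,
    frozenset({"prior"}): 80.0,
    frozenset({"risk"}): 85.0,
    frozenset({"gbm"}): 70.0,
    frozenset({"prior", "risk"}): 75.0,
    frozenset({"gbm", "prior"}): 65.0,
    frozenset({"gbm", "risk"}): 68.0,
    frozenset({"gbm", "prior", "risk"}): 62.0,
}
# Payout of a coalition: MSE reduction relative to the manual rate alone.
payout = {s: mse[frozenset()] - m for s, m in mse.items()}

phi = shapley_values(["prior", "risk", "gbm"], payout)
shares = {p: v / sum(phi.values()) for p, v in phi.items()}  # assumed normalization
print(phi)
print(shares)
```

By construction the contributions sum to the total MSE reduction achieved by the full model, so nothing is double-counted across predictors.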

Conclusion

To avoid anti-selection and adapt to new pricing regimes, insurers will have to learn to incorporate information from cutting-edge predictive models. Rather than trusting the black box completely, it is wise to consider new estimates together with traditional ones. To do so, we must expand our thinking about credibility to encompass the proper weighting of multiple estimates according to their contribution to pricing accuracy. Statistical methods like semi-partial correlation and machine learning techniques like the Shapley statistic are two approaches actuaries can consider to improve blending for partially credible groups.

Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries, the editors, or the respective authors’ employers.

Jason Reed, FSA, MS, MAAA is a senior director of Advanced Analytics at Optum. He can be contacted at r.jason.reed@optum.com.